This comprehensive guide delves into the realm of SQL beyond the fundamentals, revealing a world of powerful techniques for extracting and manipulating data. We’ll explore advanced query techniques, data manipulation, database design, and optimization strategies, ultimately empowering you to harness the full potential of SQL.

From intricate subqueries and joins to managing complex data transformations and optimizing query performance, this resource provides a structured and detailed approach to SQL mastery. The guide covers essential topics such as DML, DDL, stored procedures, date/time manipulation, and advanced data analysis, making it an invaluable resource for developers and data professionals alike.

Advanced Query Techniques

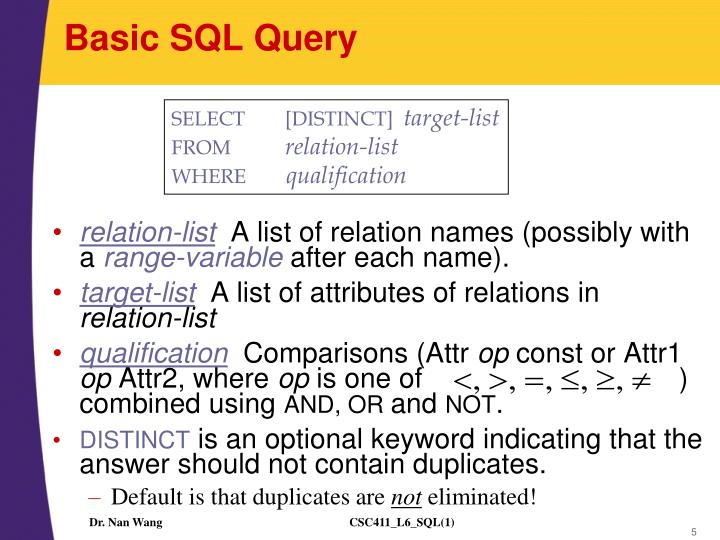

SQL, beyond basic queries, unlocks the power to extract intricate details and perform sophisticated data manipulations. Mastering advanced techniques like subqueries, joins, aggregate functions, and window functions empowers users to create powerful and insightful analyses from their databases. This section dives into these techniques, demonstrating their application and providing examples to solidify understanding.

Subqueries

Subqueries are queries nested within another query. They are valuable for complex filtering, calculations, and data retrieval, often used to generate data that will be used by the outer query. They allow for more refined and dynamic data extraction.

Subqueries can be used in various clauses of an SQL statement, including the WHERE, SELECT, and FROM clauses. They are particularly useful when you need to filter data based on the results of another query.

Example: Find all customers who have placed orders with a total amount exceeding the average order value.

```sql
SELECT customerID
FROM Orders
WHERE orderTotal > (SELECT AVG(orderTotal) FROM Orders);
```

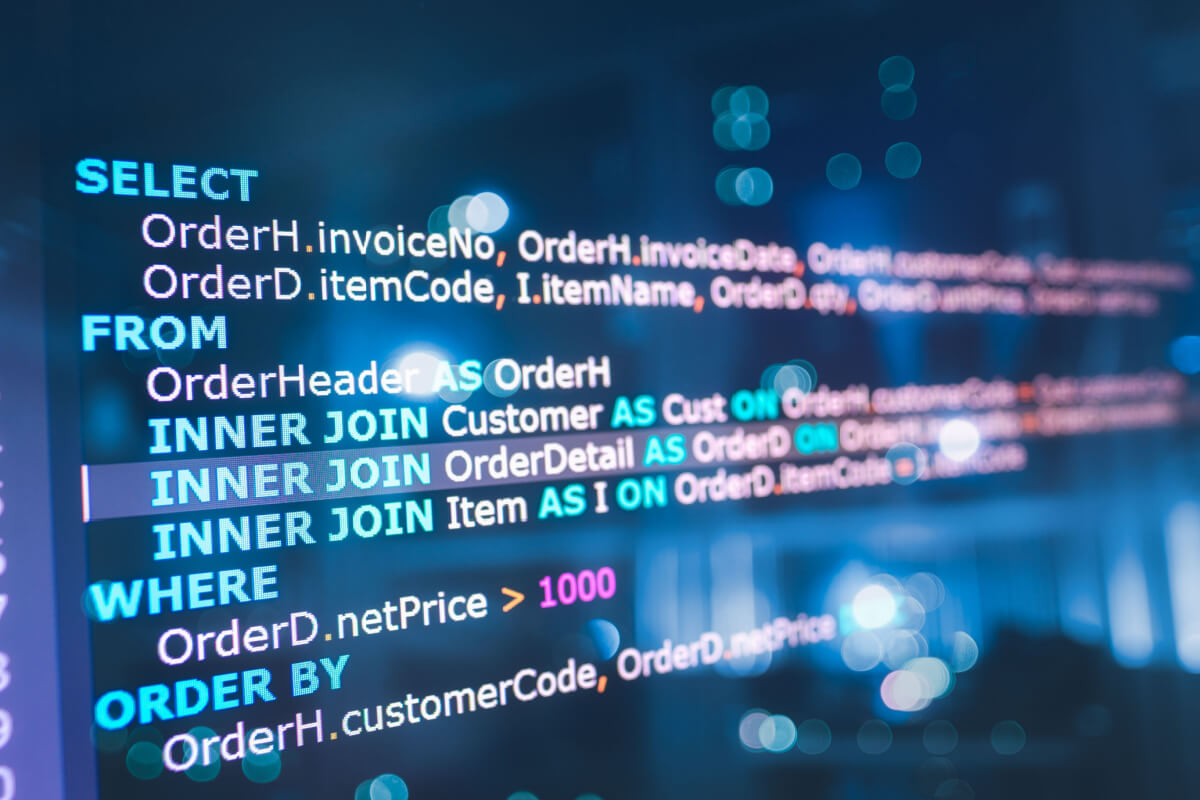

Joins

Joins combine data from multiple tables based on related columns. They are essential for retrieving information from interconnected datasets, enabling the creation of comprehensive reports and analyses.

- Inner Joins: Retrieve rows where the join condition is met in both tables. This is the most common type of join, returning only matching records. For instance, if you want to find customers who have placed orders, you would use an inner join between the customers and orders tables.

- Outer Joins (Left, Right, Full): Retrieve all rows from one table (left or right) and matching rows from the other table. Left joins return all rows from the left table and matching rows from the right table; where no match exists, the columns from the right table are NULL. Right joins return all rows from the right table and matching rows from the left table. Full outer joins return all rows from both tables, whether there’s a match or not, with NULLs filling the unmatched side.

- Self Joins: A join of a table to itself. This is used when you want to relate rows within the same table based on a common attribute. For example, finding employees who report to another employee within the same company.

Effective join usage requires careful consideration of the relationship between tables and the specific data you want to retrieve.
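The join types described above can be sketched as follows. The `Customers`, `Orders`, and `Employees` tables and columns such as `customerName`, `employeeName`, and `managerID` are assumptions made for illustration; adapt them to your own schema.

```sql
-- Inner join: only customers that have at least one order.
SELECT c.customerID, c.customerName, o.orderID
FROM Customers c
INNER JOIN Orders o ON o.customerID = c.customerID;

-- Left outer join: every customer; o.orderID is NULL when no order exists.
SELECT c.customerID, c.customerName, o.orderID
FROM Customers c
LEFT JOIN Orders o ON o.customerID = c.customerID;

-- Self join: pair each employee with their manager from the same table.
SELECT e.employeeName AS employee, m.employeeName AS manager
FROM Employees e
INNER JOIN Employees m ON m.employeeID = e.managerID;
```

Because the self join is an inner join, employees without a manager are left out of the result; a `LEFT JOIN` would keep them.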

Aggregate Functions

Aggregate functions perform calculations on a set of values and return a single value. They are commonly used to summarize and analyze data.

- COUNT: Counts the number of rows or values in a column.

- SUM: Calculates the sum of values in a column.

- AVG: Calculates the average of values in a column.

- MAX: Returns the maximum value in a column.

- MIN: Returns the minimum value in a column.

Example: Calculate the total revenue generated by all products.

```sql
SELECT SUM(price * quantity) AS totalRevenue
FROM Products;
```

Window Functions

Window functions perform calculations across a set of rows related to the current row. They are useful for ranking, partitioning, and calculating running totals or averages within a dataset.

Example: Rank products based on their sales, partitioned by category.

```sql
SELECT productID, category, sales,
       RANK() OVER (PARTITION BY category ORDER BY sales DESC) AS salesRank
FROM ProductSales;
```
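Running totals use the same `OVER` clause. The sketch below reuses the `ProductSales` table from the ranking example; the `runningSales` alias and the tie-breaking on `productID` are choices made for this illustration.

```sql
-- Running total of sales within each category, highest sales first.
-- The explicit ROWS frame accumulates row by row, so tied sales values
-- are not lumped together the way the default RANGE frame would do.
SELECT productID, category, sales,
       SUM(sales) OVER (
           PARTITION BY category
           ORDER BY sales DESC, productID
           ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW
       ) AS runningSales
FROM ProductSales;
```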

Join Types Comparison

| Join Type | Functionality | When to Use |
|---|---|---|
| Inner Join | Returns rows where the join condition is met in both tables. | Retrieving data from multiple tables based on a common column, where only matching rows are needed. |
| Left Outer Join | Returns all rows from the left table and matching rows from the right table. | Retrieving all records from one table, even if there is no match in the other table. |
| Right Outer Join | Returns all rows from the right table and matching rows from the left table. | Similar to left outer join but prioritizes the right table. |
| Full Outer Join | Returns all rows from both tables, whether there’s a match or not. | Retrieving all data from both tables, regardless of matching records. |

Working with Data Manipulation Language (DML)

Data manipulation is a crucial aspect of database management. It covers inserting new data and updating or deleting existing data within a database. This section covers efficient insertion, updating, and deletion techniques, along with key considerations for managing these operations effectively.

Efficient execution and careful handling of these operations are critical to maintaining data accuracy and reliability. Transactions, error handling mechanisms, and data validation techniques all contribute to the integrity of the database. Strategies for safely updating large datasets are also explored, to minimize disruptions and ensure data consistency.

Data Insertion

Efficient insertion of data into a database is paramount. Prepared statements, parameterized queries, and bulk insert techniques are frequently employed to enhance performance. Using these methods minimizes the risk of SQL injection vulnerabilities and boosts insertion speed, particularly when dealing with large datasets. For instance, using prepared statements avoids the need to repeatedly parse the same SQL query, improving query execution time.
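As a minimal sketch of a bulk insert, a single multi-row `INSERT` can replace several single-row statements. The `Customers` table definition and sample values below are hypothetical. Multi-row `VALUES` lists are accepted by MySQL, PostgreSQL, SQL Server, and SQLite, but not by every database system.

```sql
-- Hypothetical table used only for this illustration.
CREATE TABLE Customers (
    customerID   INT          NOT NULL PRIMARY KEY,
    customerName VARCHAR(100) NOT NULL
);

-- One statement inserts three rows, avoiding a round trip per row.
INSERT INTO Customers (customerID, customerName)
VALUES
    (101, 'Ada Lopez'),
    (102, 'Ben Okafor'),
    (103, 'Chen Wei');
```

When the values come from user input, application code would normally send them as parameters of a prepared statement instead of embedding them in the SQL text; the placeholder syntax depends on the client library.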

Data Updates

Updating existing data requires careful consideration. Identifying the target records accurately and ensuring data integrity are essential steps. Conditional updates, which modify only the records that satisfy a condition, are frequently employed to achieve targeted updates without affecting unintended records. For example, before updating customer information, verify that the update condition matches only the intended records.
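One way to apply this, sketched here with a hypothetical `Customers` table and `email` column, is to run the condition as a `SELECT` first and then reuse it unchanged in the `UPDATE`.

```sql
-- Preview the rows that the condition selects before changing anything.
SELECT customerID, email
FROM Customers
WHERE customerID = 42;

-- Apply the change with the same condition, so only that row is affected.
UPDATE Customers
SET email = 'new.address@example.com'
WHERE customerID = 42;
```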

Data Deletion

Deleting data from a database table must be executed judiciously. Carefully defining criteria to isolate the records to be deleted is crucial to prevent unintended data loss. Using `WHERE` clauses to specify deletion criteria and employing appropriate error handling are important for data integrity and system stability. For instance, deleting outdated records should be done only after validating that there are no active references to these records.
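The reference check described above might look like the following sketch. The `lastActiveDate` column and the cutoff date are assumptions for illustration, as is the relationship between `Customers` and `Orders` through `customerID`.

```sql
-- Delete customers inactive since before 2020, but only when no order
-- still references them.
DELETE FROM Customers
WHERE lastActiveDate < '2020-01-01'
  AND NOT EXISTS (
      SELECT 1
      FROM Orders o
      WHERE o.customerID = Customers.customerID
  );
```

Running the same `WHERE` clause in a `SELECT COUNT(*)` beforehand is a cheap way to confirm how many rows the statement will remove.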

Transactions

Transactions provide a mechanism for managing multiple DML operations as a single logical unit of work. They ensure data consistency by guaranteeing that either all operations within a transaction succeed or none of them do. This crucial feature prevents partial updates or inconsistencies that could result from failures during complex data modification processes. Using transactions is critical in scenarios where multiple updates need to be applied in a synchronized manner.

For instance, transferring funds between accounts requires a transaction to guarantee that both accounts are updated correctly.
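A minimal sketch of such a transfer, assuming a hypothetical `Accounts` table with `accountID` and `balance` columns. `BEGIN TRANSACTION` is accepted by SQL Server, PostgreSQL, and SQLite; MySQL uses `START TRANSACTION` instead.

```sql
BEGIN TRANSACTION;

-- Both updates take effect together at COMMIT; a failure or ROLLBACK
-- before that point leaves neither balance changed.
UPDATE Accounts SET balance = balance - 100 WHERE accountID = 1;
UPDATE Accounts SET balance = balance + 100 WHERE accountID = 2;

COMMIT;
```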

Error Handling

Error handling is crucial to maintain the stability and reliability of DML operations. Robust error handling mechanisms catch potential issues such as constraint violations, data type mismatches, and connection failures. These mechanisms allow for graceful recovery from errors and prevent data corruption or system crashes. For example, catching constraint violations can alert the system to data integrity problems.
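Error handling syntax is specific to each database system. The sketch below uses SQL Server's `TRY...CATCH` (with `THROW`, available from SQL Server 2012) and assumes a hypothetical `Accounts` table; other systems offer comparable constructs, such as exception blocks in PL/pgSQL.

```sql
BEGIN TRY
    BEGIN TRANSACTION;

    UPDATE Accounts SET balance = balance - 100 WHERE accountID = 1;
    UPDATE Accounts SET balance = balance + 100 WHERE accountID = 2;

    COMMIT TRANSACTION;
END TRY
BEGIN CATCH
    -- Undo any partial work, then re-raise the original error to the caller.
    IF @@TRANCOUNT > 0
        ROLLBACK TRANSACTION;
    THROW;
END CATCH;
```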

Updating Large Datasets

Updating a large dataset requires a phased approach to avoid performance issues and data inconsistencies. Strategies for dividing the update into smaller, manageable chunks are vital to avoid performance bottlenecks. Batch processing and incremental updates are techniques that are frequently employed to efficiently update large datasets. Implementing these techniques minimizes the impact of the update on the overall system performance and data consistency.

For instance, updating millions of records should be done in batches, rather than trying to update all records at once.
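One possible batching pattern, written in SQL Server syntax, is sketched below. The `orderStatus` and `orderDate` columns, the status values, and the batch size are assumptions for illustration.

```sql
DECLARE @BatchSize INT = 10000;
DECLARE @RowsAffected INT = 1;

-- Each pass updates at most @BatchSize rows. Updated rows no longer match
-- the WHERE clause, so the loop ends once no qualifying rows remain.
WHILE @RowsAffected > 0
BEGIN
    UPDATE TOP (@BatchSize) Orders
    SET orderStatus = 'archived'
    WHERE orderStatus = 'completed'
      AND orderDate < '2020-01-01';

    SET @RowsAffected = @@ROWCOUNT;
END;
```

Each pass commits on its own in the default autocommit mode, which keeps locks and transaction log growth small compared with one large update.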

Data Validation and Constraints

Data validation and constraints play a crucial role in ensuring data integrity. These mechanisms restrict the types of data that can be entered into the database, enforcing consistency and accuracy. Data constraints ensure that the data conforms to predefined rules, preventing errors and inconsistencies. For example, enforcing a constraint to ensure a field only accepts positive numbers prevents data entry errors.
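The positive-number rule can be expressed as a `CHECK` constraint. This sketch uses a hypothetical, cut-down `Products` table; `ALTER TABLE ... ADD CONSTRAINT ... CHECK` is supported by SQL Server, PostgreSQL, and MySQL 8.0.16 or later, among others.

```sql
-- Hypothetical table used only for this illustration.
CREATE TABLE Products (
    productID INT            NOT NULL PRIMARY KEY,
    price     DECIMAL(10, 2) NOT NULL
);

-- Reject zero or negative prices at the database level.
ALTER TABLE Products
ADD CONSTRAINT chk_products_price_positive CHECK (price > 0);

-- Accepted: the price satisfies the constraint.
INSERT INTO Products (productID, price) VALUES (1, 19.99);

-- Rejected with a constraint violation: the price is negative.
INSERT INTO Products (productID, price) VALUES (2, -5.00);
```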

Summary of Data Manipulation Techniques

| Operation | Description | Use Cases |
|---|---|---|
| INSERT | Adds new data to a table. | Adding new records, populating tables. |
| UPDATE | Modifies existing data in a table. | Correcting errors, updating information. |
| DELETE | Removes data from a table. | Removing outdated records, cleaning data. |

Data Definition Language (DDL) and Database Design

Data Definition Language (DDL) statements are fundamental for establishing and managing the structure of a relational database. They define the database schema, including tables, constraints, and indexes, crucial for organizing and accessing data efficiently. Understanding DDL is essential for creating well-structured databases capable of handling complex data relationships.

Creating and Managing Tables

DDL enables the creation of tables with specific attributes and data types. Defining primary keys ensures data uniqueness, while foreign keys establish relationships between tables. Indexes enhance query performance by accelerating data retrieval. Mastering these concepts is essential for building robust and scalable database systems.

- Primary Keys: Primary keys uniquely identify each record within a table. They prevent duplicate entries and are crucial for data integrity. For example, in a customer table, a primary key could be a unique customer ID.

- Foreign Keys: Foreign keys establish relationships between tables. They link records in one table to records in another, enforcing referential integrity. For instance, an order table might have a foreign key referencing a customer table, linking each order to a specific customer.

- Indexes: Indexes speed up data retrieval by creating pointers to data. They are particularly useful for large tables and frequently queried columns. For instance, an index on the customer name column would allow for faster searches based on customer names.

Modifying Existing Database Schemas

DDL statements allow for modifications to existing database structures. These changes include altering table definitions, adding new columns, or dropping existing ones. Careful consideration is required to avoid data inconsistencies and ensure data integrity.

- ALTER TABLE: The `ALTER TABLE` statement is used to modify an existing table. Examples include adding new columns, changing data types, or modifying constraints.

- DROP TABLE: The `DROP TABLE` statement removes an entire table from the database. Use with caution as it permanently deletes all data associated with the table.

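- TRUNCATE TABLE: The `TRUNCATE TABLE` statement removes all rows from a table, leaving the table structure intact. It is generally faster than `DELETE FROM` because it typically releases the table's storage instead of logging each deleted row. It does not accept a `WHERE` clause, and in some database systems (MySQL and Oracle, for example) it cannot be rolled back.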

Designing Tables with Appropriate Data Types and Constraints

Selecting the appropriate data type for each column is crucial for data integrity and storage efficiency. Constraints ensure data accuracy and consistency. Proper data type and constraint selection minimizes errors and ensures that data is stored and accessed effectively.

- Data Types: Choosing appropriate data types, such as `INT`, `VARCHAR`, `DATE`, `DECIMAL`, significantly impacts storage space and query performance. Using the correct data type ensures data accuracy and consistency.

- Constraints: Constraints, such as `NOT NULL`, `UNIQUE`, `CHECK`, `DEFAULT`, enforce specific rules on the data stored in the table. These constraints maintain data integrity and consistency, ensuring accurate data representation.

Normalization Techniques for Relational Database Design

Normalization is a crucial technique for organizing data in relational databases. It reduces data redundancy and improves data integrity. By applying normalization rules, database designers ensure data consistency and efficiency.

- Normalization Rules: Normalization techniques, such as first, second, and third normal forms, are crucial for reducing data redundancy and improving data integrity. These rules systematically eliminate redundant data, improving the overall structure and efficiency of the database.

Examples of Creating Complex Table Structures

Designing complex tables involves careful consideration of relationships between entities. These relationships are often depicted through diagrams.

Example: A `Products` table with columns for `productID` (primary key), `productName`, `description`, and `price`, along with a `Categories` table with columns for `categoryID` (primary key) and `categoryName`. A foreign key constraint in the `Products` table referencing the `Categories` table would establish the relationship between products and their categories.
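The structure described above could be declared as in the sketch below. The column sizes, the `NOT NULL`, `UNIQUE`, and `CHECK` choices, the constraint name, and the `categoryID` column added to `Products` to carry the foreign key are assumptions for illustration.

```sql
CREATE TABLE Categories (
    categoryID   INT          NOT NULL PRIMARY KEY,
    categoryName VARCHAR(100) NOT NULL UNIQUE
);

CREATE TABLE Products (
    productID   INT            NOT NULL PRIMARY KEY,
    productName VARCHAR(200)   NOT NULL,
    description VARCHAR(1000),
    price       DECIMAL(10, 2) NOT NULL CHECK (price >= 0),
    categoryID  INT            NOT NULL,
    -- Each product must point at an existing category.
    CONSTRAINT fk_products_category
        FOREIGN KEY (categoryID) REFERENCES Categories (categoryID)
);
```

`Categories` is created first because the foreign key in `Products` refers to it.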

DDL Statements and Their Purposes

| DDL Statement | Purpose |
|---|---|
| CREATE TABLE | Creates a new table in the database. |
| ALTER TABLE | Modifies an existing table. |
| DROP TABLE | Deletes a table from the database. |
| TRUNCATE TABLE | Removes all rows from a table. |
| CREATE INDEX | Creates an index on a table column. |

Stored Procedures and Functions

Stored procedures and functions are powerful tools in SQL that enhance code reusability, efficiency, and security. They encapsulate a set of SQL statements within a defined block, allowing for organized and repeatable execution. This modular approach simplifies database management and maintenance.

By encapsulating logic within stored procedures and functions, developers can improve code maintainability and reduce errors. Changes to the underlying logic only need to be made in one place, which can significantly decrease the risk of introducing bugs or inconsistencies.

Creating and Using Stored Procedures

Stored procedures are named sets of SQL statements that are stored in the database and can be executed repeatedly. They are particularly useful for frequently used tasks or complex operations. Creating a stored procedure involves defining a name, parameters (if any), and the SQL statements to be executed. Once created, the procedure can be called by specifying its name and passing any required parameters.

Example: A stored procedure to calculate the total sales for a given product category within a specific time frame could be written as follows (SQL Server syntax):

```sql
CREATE PROCEDURE CalculateTotalSales
    @CategoryName VARCHAR(50),
    @StartDate DATE,
    @EndDate DATE
AS
BEGIN
    SELECT SUM(SalesAmount) AS TotalSales
    FROM Sales
    WHERE Category = @CategoryName
      AND OrderDate BETWEEN @StartDate AND @EndDate;
END;
```

This procedure takes three parameters: the category name, start date, and end date. It then returns the total sales for that category within the specified period. To execute it, a user would call it with the appropriate values:

```sql
EXEC CalculateTotalSales 'Electronics', '2023-01-01', '2023-03-31';
```

Creating and Using Functions

Functions, unlike stored procedures, typically return a single value or a set of values. They are ideal for performing complex calculations or data transformations. They are often used within queries to incorporate calculated results directly.

Example: A function to calculate the discount amount for a given order could be written as follows (SQL Server syntax):

```sql
CREATE FUNCTION CalculateDiscount (@OrderTotal DECIMAL(18, 2))
RETURNS DECIMAL(18, 2)
AS
BEGIN
    DECLARE @Discount DECIMAL(18, 2);

    -- Orders over 1000 earn a 10% discount; smaller orders earn none.
    IF @OrderTotal > 1000
        SET @Discount = @OrderTotal * 0.1;
    ELSE
        SET @Discount = 0;

    RETURN @Discount;
END;
```

This function takes the order total as input and returns the discount amount. The function can be used within a query like this:

```sql
SELECT OrderID, OrderTotal, dbo.CalculateDiscount(OrderTotal) AS DiscountAmount
FROM Orders;
```

Improving Efficiency and Security

Stored procedures and functions can improve database efficiency because the database can cache and reuse their execution plans instead of parsing and planning the same statements on every call, and because a single call can replace several round trips between the application and the server. They also enhance security by encapsulating database logic: users can be granted permission to execute a procedure without direct access to the underlying tables, which promotes better access control.

Best Practices for Designing Efficient Stored Procedures

Designing efficient stored procedures involves several best practices. These include using appropriate data types, minimizing network traffic by returning only necessary data, and avoiding unnecessary computations. Efficient procedures are vital for optimized database performance and a positive user experience.

Comparison of Stored Procedures and Functions

| Feature | Stored Procedure | Function |
|---|---|---|
| Return Value | Multiple values or no value | Single value or set of values |
| Usage | Complex operations, updates, and sets of queries | Calculations, transformations, or values |
| Execution | Execute a set of SQL statements | Calculate a value or return a set of values |

Optimizing SQL Queries

Efficient query execution is crucial for the performance of any database-driven application. Poorly optimized queries can lead to significant delays in response times, impacting user experience and overall system efficiency. This section explores techniques for identifying and resolving slow-running queries, utilizing indexes for performance enhancement, and employing various optimization strategies. It also details the use of query profiling tools to analyze execution plans, highlighting different methods for optimizing query performance across diverse scenarios.

Understanding query optimization is essential for maintaining a responsive, high-performing database system. It involves a systematic approach: identify the bottlenecks, then implement changes that improve query efficiency and, with it, overall application performance.

Identifying Slow-Running Queries

Query performance issues can manifest in various ways, from noticeable delays in retrieving data to application freezes. A critical step in optimizing queries is identifying the problematic ones. Tools and techniques exist for locating and analyzing slow-running queries. Database management systems (DBMS) often provide features to track query execution time, allowing users to pinpoint the queries that are consuming excessive resources.

Using Indexes to Speed Up Query Performance

Indexes significantly enhance query performance by allowing the database to quickly locate specific data rows without needing to scan the entire table. An index is a separate, sorted data structure (commonly a B-tree) that maps column values to the locations of the matching rows. It acts like the index in a book, allowing you to quickly find specific information. A well-designed index can drastically improve query speed, but every index also consumes storage and adds work to writes, so effective index creation requires careful consideration of the query patterns used frequently in the application.

Indexes can be single-column or composite (multi-column).
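Both kinds of index can be created as in this sketch. The index names and the `customerName`, `customerID`, and `orderDate` columns are hypothetical.

```sql
-- Single-column index for lookups by customer name.
CREATE INDEX idx_customers_name ON Customers (customerName);

-- Composite index for queries that filter by customer and then by date.
-- Column order matters: this index helps filters on customerID alone,
-- but generally not filters on orderDate alone.
CREATE INDEX idx_orders_customer_date ON Orders (customerID, orderDate);
```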

Query Optimization Strategies

Several strategies can enhance query performance. Use appropriate data types and avoid unnecessary joins. Choose the least expensive join type that returns the rows you need, for example an inner join instead of a full outer join when unmatched rows are not required. Craft query logic carefully: keep WHERE clauses selective and watch for correlated subqueries that run once per outer row. Avoid wrapping indexed columns in functions or calculations within the WHERE clause, because this usually prevents the database from using an index on that column.
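The advice about functions in the WHERE clause can be illustrated with a date filter. The sketch assumes an `Orders` table with an indexed `orderDate` column; `YEAR()` is available in MySQL and SQL Server.

```sql
-- Harder to optimize: YEAR() must be evaluated for every row's orderDate,
-- so an index on orderDate usually cannot be used for the filter.
SELECT orderID
FROM Orders
WHERE YEAR(orderDate) = 2023;

-- Equivalent range predicate on the bare column, which can use the index.
SELECT orderID
FROM Orders
WHERE orderDate >= '2023-01-01'
  AND orderDate <  '2024-01-01';
```

The half-open range (`>=` start, `<` next year) also behaves correctly when the column stores a time of day along with the date.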

Using Query Profiling Tools to Analyze Query Execution Plans

Query profiling tools provide valuable insights into how queries execute, including details about the query plan and resource usage. Analyzing the query execution plan can pinpoint bottlenecks and suggest areas for optimization. Profiling tools often visualize the query execution plan, making it easier to understand the steps taken by the database to execute the query.
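In MySQL and PostgreSQL, the plan for a query can be requested by prefixing it with `EXPLAIN`, as in the sketch below (table and column names are hypothetical). SQL Server exposes the same information through `SET SHOWPLAN_ALL ON` or the graphical execution plan in its tools.

```sql
-- Show how the database intends to execute the query, without running it.
EXPLAIN
SELECT c.customerID, COUNT(*) AS orderCount
FROM Customers c
JOIN Orders o ON o.customerID = c.customerID
GROUP BY c.customerID;
```

In the output, look for full table scans on large tables and for joins that do not use an index; those are common starting points for optimization.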

Methods for Optimizing Query Performance in Different Scenarios

Optimization strategies vary depending on the specific scenario. For example, optimizing queries involving large datasets requires different techniques than those for smaller tables. In the case of large datasets, partitioning the table or using clustered indexes may significantly improve performance. For frequently queried data, creating appropriate indexes is crucial.

Table Comparing Various Query Optimization Techniques

| Optimization Technique | Description | Benefits | Considerations |
|---|---|---|---|
| Index Creation | Creating indexes on frequently queried columns. | Faster data retrieval. | Increased storage space, potential impact on write operations. |
| Query Rewriting | Refactoring the query to a more efficient form. | Improved query performance. | Requires understanding of query execution plans. |
| Data Partitioning | Dividing large tables into smaller, more manageable partitions. | Faster query execution for specific partitions. | Complexity in managing partitions. |
| Using Appropriate Joins | Employing the most efficient join type for the query. | Reduced resource consumption. | Understanding of join types and their implications. |

Working with Dates and Times

Dates and times are fundamental components in many database applications. Efficient handling of these values is crucial for tasks like tracking events, calculating durations, and reporting on historical trends. SQL provides various data types and functions to effectively manage date and time information.

Date and Time Data Types

Different SQL implementations offer a range of date and time data types. Understanding these types is essential for storing and manipulating data accurately. Common types include DATE, TIME, DATETIME, TIMESTAMP, and potentially others depending on the specific database system.

- DATE: Stores only the date part (year, month, day) without any time component.

- TIME: Stores only the time part (hours, minutes, seconds) without any date component.

- DATETIME: Stores both date and time components. The exact format and precision vary depending on the database system. This type often stores values in a single column.

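- TIMESTAMP: Similar to DATETIME, but its behavior differs by database system. In MySQL, TIMESTAMP values are converted to UTC for storage and back to the session time zone on retrieval. PostgreSQL offers `TIMESTAMP WITH TIME ZONE` for the same purpose. In SQL Server, `timestamp` is not a date type at all but a synonym for `rowversion`. Types that record an exact moment in time are helpful for global applications.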

Manipulating Dates and Times with SQL Functions

SQL provides a rich set of functions to manipulate date and time values. These functions enable tasks like extracting parts of a date, calculating differences between dates, and formatting dates for display.

- Extracting Date Parts: Functions like `YEAR()`, `MONTH()`, `DAY()`, `HOUR()`, `MINUTE()`, and `SECOND()` extract specific components from a date or time value. Availability varies by database system; MySQL provides all six.

  - Example: To get the year from a date in the `orders` table, use `YEAR(order_date)`.

- Calculating Differences: `DATEDIFF()` calculates the difference between two date or time values, which is useful for determining durations or intervals. Its signature depends on the database system.

  - Example: In SQL Server, `DATEDIFF(day, order_date, GETDATE())` returns the number of days between `order_date` and the current date. The MySQL equivalent is `DATEDIFF(CURRENT_DATE, order_date)`.

- Formatting Dates: Functions like `DATE_FORMAT()` (MySQL) convert date and time values into specific formats, making them suitable for presentation.

  - Example: To display an order date in 'YYYY-MM-DD' format, use `DATE_FORMAT(order_date, '%Y-%m-%d')`.

Calculations on Date and Time Values

Calculations on date and time values are common in various applications. SQL provides functions to perform arithmetic operations on dates and times.

- Adding/Subtracting Time Intervals: In MySQL, `DATE_ADD()` and `DATE_SUB()` add or subtract intervals (days, months, years) from a date or time. SQL Server uses `DATEADD()` for both directions.

  - Example: `DATE_ADD(order_date, INTERVAL 7 DAY)` adds seven days to `order_date`.

- Determining Time Intervals: In SQL Server, `DATEDIFF()` takes a unit (day, hour, minute, etc.) as its first argument. In MySQL, `DATEDIFF()` returns whole days only; `TIMESTAMPDIFF()` handles other units there.

  - Example: In SQL Server, `DATEDIFF(HOUR, order_start_time, order_end_time)` calculates the difference in hours between two timestamps.
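Several of these functions can be combined in one query. The sketch below uses MySQL syntax throughout, where `DATEDIFF()` takes two arguments and returns days; the `orders` table with `order_id` and `order_date` columns is assumed, and the column aliases are hypothetical.

```sql
SELECT order_id,
       YEAR(order_date)                     AS order_year,
       -- Whole days from the order date up to today.
       DATEDIFF(CURRENT_DATE, order_date)   AS days_since_order,
       -- A date seven days after the order.
       DATE_ADD(order_date, INTERVAL 7 DAY) AS follow_up_date,
       DATE_FORMAT(order_date, '%Y-%m-%d')  AS order_date_text
FROM orders;
```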

Handling Time Zones

Handling time zones is crucial for applications with users or data spread across different time zones. SQL often has features to store time zone information or convert between time zones.

- Storing Time Zone Information: Some databases allow you to store time zone information directly with the date and time value, or you might use a separate column to store the time zone. This helps to maintain consistency and accuracy.

- Converting Between Time Zones: Use database-specific functions to perform conversions. These functions usually require both the timestamp and the target time zone.
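As one example of a database-specific conversion function, MySQL provides `CONVERT_TZ()`. The sketch assumes that `order_date` in a hypothetical `orders` table holds UTC values, and uses numeric offsets, which work without any extra setup.

```sql
-- Convert a UTC value to a fixed offset of five hours behind UTC.
SELECT order_id,
       order_date                                 AS order_date_utc,
       CONVERT_TZ(order_date, '+00:00', '-05:00') AS order_date_utc_minus_5
FROM orders;
```

Named zones such as 'America/New_York' handle daylight saving time, but in MySQL they return NULL unless the server's time zone tables have been loaded. PostgreSQL and SQL Server offer an `AT TIME ZONE` operator for the same task.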

Date and Time Functions Summary

| Function | Functionality |
|---|---|
| `DATE_FORMAT()` | Formats a date or time value. |
| `YEAR()`, `MONTH()`, `DAY()` | Extracts year, month, and day components. |
| `HOUR()`, `MINUTE()`, `SECOND()` | Extracts hour, minute, and second components. |
| `DATEDIFF()` | Calculates the difference between two dates or times. |
| `DATE_ADD()`, `DATE_SUB()` | Adds or subtracts an interval from a date or time. |

Advanced Data Analysis Techniques

Leveraging SQL beyond basic querying opens a world of possibilities for extracting valuable insights from data. Advanced techniques enable complex aggregations, report generation, data mining, and the creation of custom metrics. This allows for a deeper understanding of trends, patterns, and anomalies within datasets, transforming raw data into actionable knowledge.

By mastering complex aggregations, grouping, and reporting capabilities, users can uncover hidden patterns and relationships within their data. This is crucial for informed decision-making in diverse fields, from business analytics to scientific research.

Complex Aggregations and Group By Operations

SQL’s `GROUP BY` clause, combined with aggregate functions like `SUM`, `AVG`, `COUNT`, `MAX`, and `MIN`, empowers the creation of insightful summaries. These functions allow for concise analysis of data subsets, generating meaningful statistics for specific groups or categories. Understanding how to effectively utilize these functions is critical for extracting relevant information from large datasets.

- The `GROUP BY` clause organizes data into groups based on specified columns, enabling the application of aggregate functions to each group independently. For example, grouping sales data by product category allows for calculating total sales for each category.

- Aggregate functions are applied to each group, producing summary statistics for that group. For example, `AVG(price)` calculates the average price for each product category.

- Combining these techniques allows for intricate analyses. For instance, calculating the average order value for each customer segment, further categorized by geographic region.
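Grouping by more than one column might look like the sketch below. The `Customers` and `Orders` tables, the `customer_segment`, `region`, and `order_value` columns, and the threshold of ten orders are assumptions for illustration.

```sql
-- Summary statistics for each combination of segment and region.
SELECT c.customer_segment,
       c.region,
       COUNT(*)           AS order_count,
       SUM(o.order_value) AS total_sales,
       AVG(o.order_value) AS average_order_value
FROM Customers c
JOIN Orders o ON c.customer_id = o.customer_id
GROUP BY c.customer_segment, c.region
-- HAVING filters whole groups after aggregation, unlike WHERE,
-- which filters individual rows before grouping.
HAVING COUNT(*) >= 10
ORDER BY c.customer_segment, c.region;
```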

Generating Reports and Dashboards

SQL plays a pivotal role in report generation and dashboard development. SQL queries supply the data behind reports, from simple summaries to the datasets that drive complex visualizations. The same queries can feed dashboards that provide a dynamic overview of key metrics and trends.

- SQL queries return tabular result sets, which reporting tools can present as tables, charts, or text. The reports can be tailored to specific needs and audiences, offering a focused view of the data.

- Generated reports can be integrated into dashboards for real-time monitoring and analysis. Dashboards provide a comprehensive view of key performance indicators (KPIs), enabling quick identification of trends and patterns.

- These reports and dashboards can be easily customized to meet specific needs, providing a dynamic and interactive view of the data, allowing users to drill down into specific details and visualize trends over time.

Data Mining and Analysis

Data mining with SQL involves exploring large datasets to identify patterns, anomalies, and relationships. SQL is used for extracting and preparing the data for advanced analysis using tools like machine learning algorithms or statistical methods. These tools can be used to build models for prediction or to understand trends in the data.

- SQL queries can identify trends and patterns within large datasets. For instance, identifying products with high customer returns or discovering relationships between customer demographics and purchase behavior.

- Extracting and preparing data from the database for use in external tools or algorithms is a key part of the data mining process.

- By combining SQL with other analytical tools, users can perform sophisticated analyses, uncover valuable insights, and build predictive models.

Examples of SQL Queries for Complex Data Analysis

SQL queries for complex data analysis can be intricate, utilizing multiple joins, subqueries, and aggregate functions. The following example demonstrates a query to calculate the average order value for each customer segment.

```sql
SELECT c.customer_segment, AVG(o.order_value) AS average_order_value
FROM Customers c
JOIN Orders o ON c.customer_id = o.customer_id
GROUP BY c.customer_segment;
```

Creating Custom Metrics

Custom metrics are crucial for evaluating specific business aspects. SQL queries can be used to define and calculate these metrics based on existing data, enabling a more nuanced understanding of the data and performance.

- Custom metrics can be created by combining existing data elements or calculations, allowing for a more focused view of specific business aspects.

- These metrics offer a unique perspective on the performance of specific areas or departments within an organization.
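As a sketch of a custom metric, the query below computes the share of high-value orders per customer segment. The metric itself, the 500 threshold, and the table and column names are hypothetical.

```sql
-- Percentage of each segment's orders with a value above 500.
-- Multiplying by 100.0 forces decimal arithmetic, so the division
-- does not truncate to an integer.
SELECT c.customer_segment,
       SUM(CASE WHEN o.order_value > 500 THEN 1 ELSE 0 END) * 100.0 / COUNT(*)
           AS high_value_order_pct
FROM Customers c
JOIN Orders o ON c.customer_id = o.customer_id
GROUP BY c.customer_segment;
```

Defining a metric like this once, for instance in a view, helps keep every report that uses it consistent.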

Table: Complex Aggregations and Group-by Operations

| Customer Segment | Product Category | Total Sales | Average Order Value |
|---|---|---|---|
| Business | Electronics | $10,000 | $200 |
| Business | Clothing | $5,000 | $100 |
| Consumer | Electronics | $8,000 | $150 |
| Consumer | Clothing | $6,000 | $120 |

This table illustrates the output of a grouping operation that calculates total sales and average order value for each combination of customer segment and product category. The figures are sample values only; actual results depend on the underlying business data.

Ending Remarks

This exploration of SQL beyond basic queries has illuminated a wealth of advanced functionalities. We’ve examined techniques for optimizing performance, managing data manipulation, and conducting complex data analysis. By mastering these advanced strategies, you’ll unlock new capabilities in data extraction, manipulation, and reporting, significantly enhancing your database skills and overall efficiency.