Welcome to a practical guide on automating everyday tasks using Python scripts. This comprehensive tutorial will equip you with the knowledge and skills to streamline your workflow, boost efficiency, and save valuable time. We’ll explore various facets of automation, from simple file management to complex web interactions and system tasks, all within the context of Python’s powerful capabilities.

Learn how to leverage Python libraries like `os`, `shutil`, `pathlib`, `requests`, `BeautifulSoup`, and `pandas` to automate a wide range of activities. Discover the benefits of automating tasks, from enhanced productivity to reduced errors, and gain practical experience through examples and detailed explanations. The guide also emphasizes best practices, error handling, and security considerations for robust and reliable automated scripts.

Introduction to Automation with Python

Automating repetitive tasks is a crucial aspect of modern software development and productivity. Python, with its vast ecosystem of libraries, provides powerful tools for streamlining workflows and freeing up valuable time. This section introduces the fundamental concepts of automating tasks using Python scripts, highlighting the benefits and illustrating a practical example.

Python offers numerous advantages for automating tasks. It is a high-level, general-purpose programming language, making it accessible and versatile.

Its readability and extensive libraries simplify the development process, reducing the time and effort required to create sophisticated automation solutions.

Benefits of Automating Tasks with Python

Automating tasks with Python offers several advantages. It boosts efficiency by handling repetitive processes, which leads to significant time savings and frees people to focus on more strategic and creative work. Automation also reduces errors: a script performs the same steps in the same way every time, and that consistency produces more reliable, higher-quality output.

A Simple Example: Renaming Files

A basic example of automating a task is renaming files. Consider a scenario where you have numerous files with inconsistent naming conventions. Python scripts can be used to systematically rename these files according to a predefined pattern.

```python
import os
import re

def rename_files(directory, pattern, replacement):
    for filename in os.listdir(directory):
        # Only rename files whose names match the regular expression
        if re.search(pattern, filename):
            new_name = re.sub(pattern, replacement, filename)
            os.rename(os.path.join(directory, filename), os.path.join(directory, new_name))

# Example usage
rename_files("my_files", r"old_(\d+)", r"new_\1")
```

This script utilizes the `os` and `re` modules.

The `rename_files` function iterates through the files in a specified directory. If a filename matches the provided regular expression (in this case, “old_” followed by one or more digits), `re.sub` builds the new name and `os.rename` renames the file. The `\1` in the replacement string is a backreference to the captured digits, so they are retained in the new name. This is a simple but effective demonstration of Python’s power in automating file management.

Python Libraries for Automation

Python’s robust ecosystem of libraries greatly simplifies automation tasks. These libraries provide pre-built functions and tools for various operations, such as file manipulation, system interactions, and data processing.

- The `os` module provides functions for interacting with the operating system, enabling file system operations like creating, deleting, and renaming files and directories. It’s a foundational library for most automation tasks.

- The `shutil` module offers more advanced file operations, including copying, moving, and deleting files and directories. This module is particularly useful for complex file management tasks.

- The `pathlib` module offers an object-oriented approach to file paths, making it easier to work with files and directories. Its intuitive interface simplifies file system navigation and manipulation.

Comparison of Python Libraries for Automation

The following table compares different Python libraries for automation tasks, highlighting their key features and use cases.

| Library | Description | Use Cases |
|---|---|---|
| `os` | Provides fundamental operating system interactions. | Basic file system operations, such as renaming, creating, and deleting files and directories. |
| `shutil` | Offers advanced file operations, such as copying, moving, and deleting files and directories. | Complex file management tasks, like archiving and backing up files. |
| `pathlib` | Provides an object-oriented approach to file paths. | Improved code readability and maintainability for file system operations, especially in complex scenarios. |
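To make the comparison concrete, here is a minimal sketch that does one small job with all three modules. It creates a folder and writes a file with `os`, copies the folder with `shutil`, and reads the result back with `pathlib`. It runs inside a temporary directory so nothing else is touched. The names `reports`, `reports_backup`, and `summary.txt` are hypothetical.

```python
import os
import shutil
import tempfile
from pathlib import Path

def demo(base):
    """Create, copy, and read back files using os, shutil, and pathlib."""
    # os: string-based paths joined with os.path.join
    reports_dir = os.path.join(base, "reports")
    os.makedirs(reports_dir, exist_ok=True)
    with open(os.path.join(reports_dir, "summary.txt"), "w") as f:
        f.write("total: 3\n")

    # shutil: higher-level operations, here copying a whole directory tree
    shutil.copytree(reports_dir, os.path.join(base, "reports_backup"))

    # pathlib: the same locations as Path objects, joined with the / operator
    backup = Path(base) / "reports_backup"
    names = sorted(p.name for p in backup.iterdir())
    return names, (backup / "summary.txt").read_text()

with tempfile.TemporaryDirectory() as tmp:
    print(demo(tmp))  # (['summary.txt'], 'total: 3\n')
```

In practice many scripts mix the three modules. `pathlib` handles path building and inspection, and `shutil` handles bulk copy and move operations.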

Automating File Management

Automating file management tasks is a crucial aspect of streamlining workflows in various domains. Python provides robust tools for efficiently handling file operations, ranging from simple renaming to complex batch processing. This section explores the capabilities of Python scripting for automating common file management activities, including renaming, copying, moving, and deleting files, along with the use of regular expressions for intricate file name patterns.

Python’s versatility allows users to customize scripts to meet specific needs, thereby optimizing file management procedures and reducing manual effort.

This section will detail the process of automating these tasks and offer practical examples.

Renaming Files

Renaming files is a common task, often required when organizing or updating datasets. Python’s `os` module provides functions to rename files with ease. Using this module, scripts can rename files based on predefined criteria or patterns, significantly reducing manual effort. The following example renames files based on a simple pattern:

```python
import os
import re

def rename_files(directory, pattern, new_pattern):
    for filename in os.listdir(directory):
        if re.search(pattern, filename):
            old_path = os.path.join(directory, filename)
            # Substitute the pattern to build the new name, keeping captured groups
            new_name = re.sub(pattern, new_pattern, filename)
            new_path = os.path.join(directory, new_name)
            os.rename(old_path, new_path)
            print(f"Renamed {filename} to {new_name}")

# Example usage:
rename_files("data", r"old_(\d+)", r"new_\1")
```

This code snippet uses regular expressions to search for files matching the `old_(\d+)` pattern, replacing “old_” with “new_” and preserving the numerical portion.

This ensures that the file names are consistently updated while retaining crucial information.

Copying and Moving Files

Copying and moving files are common operations in data management. The `shutil` module in Python provides efficient methods for these tasks.

```python
import os
import shutil

def copy_files(source_dir, destination_dir):
    os.makedirs(destination_dir, exist_ok=True)  # create the destination if needed
    for filename in os.listdir(source_dir):
        source_path = os.path.join(source_dir, filename)
        if not os.path.isfile(source_path):
            continue  # skip subdirectories; copy2 copies single files
        destination_path = os.path.join(destination_dir, filename)
        shutil.copy2(source_path, destination_path)
        print(f"Copied {filename} to {destination_dir}")

def move_files(source_dir, destination_dir):
    os.makedirs(destination_dir, exist_ok=True)
    for filename in os.listdir(source_dir):
        source_path = os.path.join(source_dir, filename)
        destination_path = os.path.join(destination_dir, filename)
        shutil.move(source_path, destination_path)
        print(f"Moved {filename} to {destination_dir}")

# Example usage (copy)
copy_files("source_folder", "destination_folder")
# Example usage (move): run this instead of the copy above, since the
# destination would already contain files with the same names
# move_files("source_folder", "destination_folder")
```

This code demonstrates the use of `shutil.copy2` and `shutil.move` for efficient copying and moving of files.

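`copy2` copies the file contents and also tries to preserve metadata such as modification times. `move` relocates files. It uses a simple rename when the source and destination are on the same file system, and a copy followed by a delete otherwise.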

Batch File Processing

Batch file processing involves applying operations to a group of files. This is useful for tasks such as converting files to different formats, extracting data, or applying standardized modifications. A common use case is converting a series of image files from one format to another, or consolidating information from multiple CSV files.

```python
import glob
import os

import pandas as pd

def process_csv_files(directory, output_file):
    # Collect every .csv file in the directory (sorted for a stable order)
    csv_files = sorted(glob.glob(os.path.join(directory, "*.csv")))
    if not csv_files:
        print(f"No CSV files found in {directory}")
        return  # pd.concat would fail on an empty list
    all_data = [pd.read_csv(csv_file) for csv_file in csv_files]
    # Stack the frames vertically and renumber the index
    combined_df = pd.concat(all_data, ignore_index=True)
    combined_df.to_csv(output_file, index=False)

process_csv_files("data", "combined_data.csv")
```

This example uses the `glob` module to find all CSV files in a directory and `pandas` to combine them into a single CSV file.

This approach is highly versatile and can be tailored for different file types and data processing needs.

Regular Expressions for File Names

Regular expressions are invaluable for complex file name patterns. They allow powerful and flexible matching of filenames.

```python
import os
import re

def find_files(directory, pattern):
    matching_files = []
    for filename in os.listdir(directory):
        # fullmatch requires the whole name to match, not just its beginning
        if re.fullmatch(pattern, filename):
            matching_files.append(filename)
    return matching_files

files_found = find_files("data", r"report_\d{4}-\d{2}-\d{2}\.txt")
print(files_found)
```

This code snippet uses regular expressions to find files matching a specific pattern, such as “report_YYYY-MM-DD.txt”.

This capability is critical for automated file management tasks.

File Operations Summary

| Operation | Description | Python Tools |
|---|---|---|
| File Renaming | Renames files based on a pattern | `os.rename()`, regular expressions (`re`) |
| File Copying | Copies files to a new location | `shutil.copy2()` |
| File Moving | Moves files to a new location | `shutil.move()` |
| Batch CSV Processing | Combines multiple CSV files into one | `glob`, `pandas` |

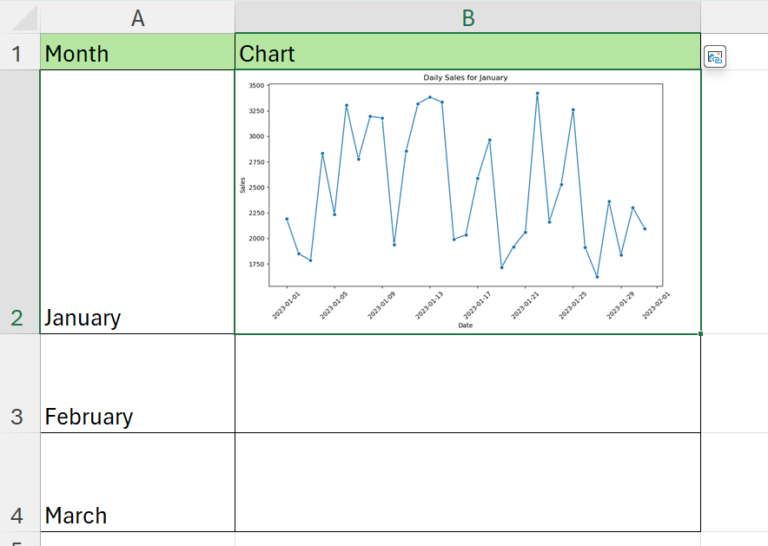

Automating Data Processing

Python excels at automating data processing tasks, a crucial aspect of data science and analysis. By leveraging libraries like Pandas, you can streamline data cleaning, transformation, and analysis, freeing up valuable time and resources for more strategic endeavors. Automation of these processes not only improves efficiency but also reduces the risk of human error, ensuring consistent and reliable results.

Data Cleaning and Transformation

Data often requires cleaning and transformation before analysis. This process involves handling missing values, correcting inconsistencies, and formatting data into a usable structure. Python’s Pandas library offers powerful tools for these tasks, making the automation process straightforward and efficient. These processes are vital for preparing data for effective analysis.

Using Pandas for Data Manipulation

Pandas is a cornerstone library for data manipulation in Python. It provides DataFrames, which are tabular data structures, enabling efficient handling of various data formats. DataFrames facilitate operations like filtering, sorting, grouping, and aggregation. Pandas’ ability to handle missing values, convert data types, and perform calculations makes it a crucial tool for automating data processing workflows.
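As a small illustration of these operations, the sketch below filters, sorts, groups, and aggregates an in-memory DataFrame. The columns and values are made-up sample data, not from a real dataset.

```python
import pandas as pd

# Hypothetical sales records used only for illustration
sales = pd.DataFrame({
    "region": ["North", "South", "North", "East", "South"],
    "product": ["A", "A", "B", "B", "B"],
    "units": [10, 4, 7, 12, 3],
    "price": [2.5, 2.5, 4.0, 4.0, 4.0],
})

# Calculation: derive a revenue column from two existing columns
sales["revenue"] = sales["units"] * sales["price"]

# Filtering: keep orders of at least 5 units
large_orders = sales[sales["units"] >= 5]

# Sorting: highest revenue first
large_orders = large_orders.sort_values("revenue", ascending=False)

# Grouping and aggregation: total units and revenue per region
summary = sales.groupby("region", as_index=False)[["units", "revenue"]].sum()

print(large_orders)
print(summary)
```

Each step returns a new DataFrame, so the steps can be chained or wrapped in functions and rerun unchanged whenever new data arrives.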

Automating Data Extraction

Python’s versatility extends to extracting data from diverse sources. Scripts can be crafted to automate the retrieval of data from databases, APIs, web pages, and files (like CSV or JSON). This automation streamlines the data acquisition process, enabling a more focused approach to analysis. Automation eliminates the manual steps involved in data retrieval, saving considerable time and resources.
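For databases, one common pattern pairs Python’s built-in `sqlite3` module with `pandas.read_sql_query`. The sketch below builds a throwaway in-memory database so it runs anywhere. The `orders` table, its columns, and the `extract_orders` helper are hypothetical. In practice you would connect to your own database file or server.

```python
import sqlite3

import pandas as pd

def extract_orders(conn, min_total):
    """Load orders at or above min_total into a DataFrame (parameterized query)."""
    query = "SELECT id, customer, total FROM orders WHERE total >= ? ORDER BY id"
    return pd.read_sql_query(query, conn, params=(min_total,))

# Throwaway in-memory database with sample rows
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer, total) VALUES (?, ?)",
    [("alice", 120.0), ("bob", 35.5), ("carol", 80.0)],
)
conn.commit()

df = extract_orders(conn, 50)
print(df)
conn.close()
```

Once the query result is a DataFrame, the same cleaning and transformation code can be applied whether the data came from a database, an API, or a file.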

Example: Extracting and Cleaning Data from a CSV File

```python
import pandas as pd

def clean_csv_data(file_path):
    try:
        df = pd.read_csv(file_path)

        # Handle missing values (replace with the column mean for numerical columns)
        numerical_cols = df.select_dtypes(include=["number"]).columns
        df[numerical_cols] = df[numerical_cols].fillna(df[numerical_cols].mean())

        # Convert 'Date' column to datetime if it exists
        if "Date" in df.columns:
            df["Date"] = pd.to_datetime(df["Date"])

        # Remove rows with empty values in specific columns
        # ("Column1" and "Column2" are placeholders for your own column names)
        df = df.dropna(subset=["Column1", "Column2"])
        return df
    except FileNotFoundError:
        print(f"Error: File not found at {file_path}")
        return None
    except pd.errors.EmptyDataError:
        print(f"Error: File is empty at {file_path}")
        return None
    except Exception as e:
        print(f"An error occurred: {e}")
        return None

# Example usage:
file_path = "data.csv"  # Replace with your CSV file path
cleaned_data = clean_csv_data(file_path)
if cleaned_data is not None:
    print(cleaned_data.head())
```

This script demonstrates a basic approach to cleaning a CSV file using pandas.

It addresses missing values, converts dates to datetime objects, and removes rows with problematic data. Adjust the code based on your specific data format and cleaning requirements.

Handling Different Data Formats

Python supports diverse data formats, including CSV, JSON, Excel, and SQL databases. Libraries like Pandas and specialized packages (e.g., `json`, `openpyxl`) offer functions to read and process these formats. Each format presents its own characteristics, demanding tailored handling techniques. For instance, CSV files often require specific delimiters, while JSON files need parsing for structured data. Knowing how to adapt to various data structures is critical for comprehensive data processing automation.
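To illustrate the two cases mentioned above, this sketch reads a semicolon-delimited CSV and flattens nested JSON records with `pandas.json_normalize`. The sample text is inline and hypothetical. With real files you would pass a file path instead of `io.StringIO`.

```python
import io
import json

import pandas as pd

# A CSV export that uses ";" as its delimiter (common in some locales)
csv_text = "name;city;score\nAna;Lisbon;91\nBen;Oslo;78\n"
csv_df = pd.read_csv(io.StringIO(csv_text), sep=";")

# Nested JSON: each record holds a sub-object we want as flat columns
json_text = """
[
  {"id": 1, "user": {"name": "Ana", "email": "ana@example.com"}},
  {"id": 2, "user": {"name": "Ben", "email": "ben@example.com"}}
]
"""
records = json.loads(json_text)
json_df = pd.json_normalize(records)  # nested keys become "user.name", "user.email"

print(csv_df)
print(json_df)
```

Without `sep=";"`, pandas would read each row as a single column. Without `json_normalize`, the `user` column would hold raw dictionaries.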

Automating Web Tasks

Python offers powerful capabilities for automating web-related tasks, extending beyond simple file and data processing. This section explores how Python can interact with websites, from extracting data to submitting forms, and delves into the ethical considerations that accompany such automation. We will be using Python libraries like `requests` and `BeautifulSoup` to demonstrate these techniques.

Web Scraping with Python

Web scraping, the process of extracting data from websites, is a crucial application of automation. Python libraries like `requests` and `BeautifulSoup` facilitate this process. `requests` handles the retrieval of web pages, while `BeautifulSoup` parses the HTML or XML content to extract specific information.

Using Requests and BeautifulSoup

The `requests` library is used to fetch web pages. It provides a simple and efficient way to send HTTP requests and receive responses. `BeautifulSoup` is a powerful tool for parsing HTML and XML documents. It allows you to navigate the document structure and extract specific elements or attributes. This combination allows for targeted data extraction from web pages.

Example Web Scraping Script

The following Python script demonstrates how to scrape data from a sample website (replace with your target URL):

```python
import requests
from bs4 import BeautifulSoup

url = "https://www.example.com"  # Replace with target URL
response = requests.get(url, timeout=10)
response.raise_for_status()  # Raise an exception for bad status codes
soup = BeautifulSoup(response.content, "html.parser")

# Find all elements with a specific class
# ("product-item" and "price" are placeholders for the target site's markup)
elements = soup.find_all("div", class_="product-item")
for element in elements:
    title_tag = element.find("h3")
    price_tag = element.find("span", class_="price")
    if title_tag is None or price_tag is None:
        continue  # skip items that lack the expected tags
    title = title_tag.get_text(strip=True)
    price = price_tag.get_text(strip=True)
    print(f"Product: {title}, Price: {price}")
```

This script fetches a webpage, parses its HTML content using `BeautifulSoup`, and then extracts product titles and prices from the page, printing them to the console.

Automating Form Submissions

Python can also automate form submissions on websites. This is useful for tasks like filling out surveys, registering on websites, or performing other interactive actions.

Form Submission Example

The following code snippet illustrates how to automate a form submission:

```python
import requests

url = "https://www.example.com/submit-form"
data = {"field1": "value1", "field2": "value2"}

# json= serializes the dict and sets the "Content-Type: application/json" header.
# Classic HTML forms usually expect form-encoded data instead: pass data=data.
response = requests.post(url, json=data, timeout=10)
if response.status_code == 200:
    print("Form submitted successfully!")
else:
    print(f"Error submitting form: {response.status_code}")
```

This example sends a POST request to a form submission URL with specific data.

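Crucially, the code checks the HTTP status code to confirm whether the submission succeeded. Network failures such as a timeout or a refused connection still raise a `requests` exception, which you can catch with a `try-except` block.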

Ethical Considerations of Web Scraping and Automation

Web scraping and automation tools should be used responsibly and ethically. Respecting robots.txt files, avoiding overloading servers, and adhering to terms of service are vital. Avoid scraping data from sites that explicitly prohibit it. Ensure that you have proper authorization to access and use the data you are scraping.
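Python’s standard library can check `robots.txt` rules for you through `urllib.robotparser`. This minimal sketch parses rules supplied as text so it runs offline. The rules, the bot name `MyScraperBot`, the URLs, and the `build_parser` helper are hypothetical. Against a live site you would call `set_url(...)` and `read()` instead of `parse(...)`.

```python
from urllib.robotparser import RobotFileParser

def build_parser(robots_txt):
    """Create a RobotFileParser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

# Hypothetical robots.txt content
rules = """
User-agent: *
Disallow: /private/
"""

parser = build_parser(rules)
for url in ["https://example.com/products", "https://example.com/private/data"]:
    allowed = parser.can_fetch("MyScraperBot", url)
    print(url, "allowed" if allowed else "disallowed")
```

Checking `can_fetch` before each request keeps the scraper within the rules the site owner has published.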

Automating System Tasks

Python empowers automation of system-level tasks, extending beyond file and data manipulation to encompass scheduling, process execution, and log monitoring. This capability significantly streamlines administrative and operational tasks, freeing up valuable time and reducing the risk of human error.

System automation with Python is crucial for maintaining efficient workflows and ensuring consistent performance. This often involves running scripts at predefined intervals, reacting to specific system events, and automating complex sequences of actions.

Automating Task Scheduling

The third-party `schedule` library (installed with `pip install schedule`) simplifies the creation of scheduled tasks. It lets you define jobs that run at specific times or at fixed intervals. This removes manual intervention and ensures tasks are executed predictably and consistently. Its readable syntax, such as `schedule.every().day.at("02:00").do(job)`, covers everything from a single daily job to several jobs running at different intervals.

Executing System Commands

The `subprocess` module in Python is vital for interacting with the operating system. It enables the execution of external commands and processes. This is crucial for automating tasks like starting applications, running scripts, or manipulating files and directories. The module offers various methods for handling command output and error conditions, ensuring reliable execution of system-level operations.
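The sketch below is a minimal example of `subprocess.run` for this pattern. It passes the command as a list of arguments (no shell), captures output as text, and uses `check=True` to turn a non-zero exit status into an exception. It runs the current Python interpreter as the "external" command so it works on any platform. `run_command` is a hypothetical helper name.

```python
import subprocess
import sys

def run_command(args):
    """Run an external command and return its stripped stdout, or None on failure."""
    try:
        result = subprocess.run(args, capture_output=True, text=True, check=True)
    except FileNotFoundError:
        print(f"Command not found: {args[0]}")
        return None
    except subprocess.CalledProcessError as e:
        print(f"Command failed with exit code {e.returncode}: {e.stderr.strip()}")
        return None
    return result.stdout.strip()

# The "external command" here is just another Python process
print(run_command([sys.executable, "-c", "print('hello from a subprocess')"]))
print(run_command([sys.executable, "-c", "import sys; sys.exit(3)"]))
```

Passing a list instead of a single string with `shell=True` avoids shell quoting problems. It also keeps file names or user input from being interpreted as shell syntax.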

Automated File Backup Script

This example demonstrates a Python script that automatically backs up files on a daily schedule.

```python
import subprocess
import time

import schedule  # third-party: pip install schedule

def backup_files():
    # Replace with your backup destination and file paths
    source_dir = "/path/to/source/files"
    backup_dir = "/path/to/backup/destination"
    # A list of arguments (no shell=True) avoids quoting problems with spaces in paths
    command = ["rsync", "-avz", source_dir, backup_dir]
    try:
        subprocess.run(command, check=True)
        print("Backup successful!")
    except FileNotFoundError:
        print("Error during backup: rsync is not installed or not on PATH")
    except subprocess.CalledProcessError as e:
        print(f"Error during backup: {e}")

schedule.every().day.at("02:00").do(backup_files)

while True:
    schedule.run_pending()
    time.sleep(1)
```

This script calls `rsync`, which must be installed on the system. `rsync` transfers only the files that have changed since the previous run, so daily backups of large directories stay fast.

The `try-except` block handles potential errors during the backup process, providing informative error messages.

Monitoring System Logs

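Monitoring system logs for specific events is crucial for proactive issue detection. Python’s `re` module can scan log lines for errors, warnings, or other significant events, and the `logging` module can record what the monitor finds. This capability facilitates swift issue resolution and helps prevent system downtime.

Example: A script that monitors a log file for errors related to database connections.

```python
import logging
import re
import time

logging.basicConfig(level=logging.INFO)

LOG_FILE = "/path/to/your/log/file"
ERROR_PATTERN = re.compile(r"Error connecting to database")

def monitor_log(position):
    """Scan lines appended since `position` and return the new end-of-file offset."""
    # Note: this simple version does not handle log rotation or truncation
    with open(LOG_FILE, "r") as log:
        log.seek(position)
        while True:
            line = log.readline()
            if not line:
                break
            if ERROR_PATTERN.search(line):
                logging.warning("Error detected: %s", line.strip())
        return log.tell()

position = 0
while True:
    position = monitor_log(position)  # only new lines are checked on each pass
    time.sleep(60)  # Check every minute
```

This example uses a precompiled regular expression to locate errors. Because it remembers the file offset between passes, each matching line is reported once rather than on every check.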

Best Practices for System Scripts

Robust system scripts require careful consideration of error handling, logging, and code organization. Explicit error handling using `try-except` blocks prevents unexpected program crashes and provides informative messages to the user. Comprehensive logging facilitates debugging and tracking of script execution. Clear variable naming and modular design improve code readability and maintainability.
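As a starting point for the logging advice above, here is a minimal sketch. It configures the standard `logging` module once and calls `logger.exception` inside an `except` block so the full traceback is recorded. The logger name `"automation"` and the helper `count_lines` are hypothetical.

```python
import logging

# Configure logging once, near the start of the script
logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(name)s: %(message)s",
)
logger = logging.getLogger("automation")

def count_lines(path):
    """Return the number of lines in a text file, or None if it cannot be read."""
    logger.info("Counting lines in %s", path)
    try:
        with open(path, encoding="utf-8") as f:
            return sum(1 for _ in f)
    except OSError:
        # logger.exception logs at ERROR level and appends the traceback
        logger.exception("Could not read %s", path)
        return None

count_lines("missing_file.txt")
```

To keep a permanent record for scheduled scripts, pass `filename="automation.log"` to `logging.basicConfig` and the same messages go to a file instead of the console.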

Error Handling and Debugging

Robust error handling is crucial for any automated script. It prevents unexpected crashes and keeps your scripts working correctly even when they encounter unexpected data or situations. This section shows how to incorporate error handling into your Python scripts, covers different exception types, and provides practical examples from several automation scenarios. It also outlines debugging strategies to help identify and resolve issues within your scripts.

Handling Exceptions in Python

Python provides a powerful mechanism for handling exceptions using `try`, `except`, and `finally` blocks. These blocks allow you to gracefully manage potential errors and prevent your script from abruptly terminating. The `try` block encloses the code that might raise an exception. The `except` block specifies the type of exception to handle and the code to execute if that exception occurs.

The `finally` block, optional but often beneficial, ensures specific code runs regardless of whether an exception occurred.
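The sketch below shows these clauses together with Python’s optional `else` clause, which runs only when the `try` block raised nothing. The `events` list simply records which blocks executed. `safe_divide` is a hypothetical name.

```python
def safe_divide(numerator, denominator, events):
    """Divide two numbers, recording which blocks of the try statement ran."""
    try:
        result = numerator / denominator
    except ZeroDivisionError:
        events.append("except")
        return None
    else:
        events.append("else")  # runs only if no exception was raised
        return result
    finally:
        events.append("finally")  # runs in every case, even after a return

log = []
print(safe_divide(10, 4, log), log)  # 2.5 ['else', 'finally']
log = []
print(safe_divide(1, 0, log), log)   # None ['except', 'finally']
```

In automation scripts, `finally` is a natural place for cleanup such as closing connections or removing temporary files. A `with` statement handles the common case of closing files automatically.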

Different Types of Exceptions

Python offers a variety of built-in exceptions for various error scenarios. Understanding these exceptions allows you to write more targeted and effective error handling. Common exceptions include `FileNotFoundError` for file operations, `ValueError` for incorrect data formats, `TypeError` for incompatible data types, `IndexError` for incorrect list indexing, and `ZeroDivisionError` for division by zero. Knowing which exceptions to anticipate is essential for creating robust error handling mechanisms.
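For example, a hypothetical helper that reads an integer field from a parsed CSV row can hit three of the exceptions listed above, depending on what goes wrong. Catching each one by name lets the script react appropriately while leaving unrelated bugs visible.

```python
def read_int_field(row, index):
    """Return (value, None) on success or (None, error_name) on failure."""
    try:
        return int(row[index]), None
    except IndexError:
        return None, "IndexError"  # the row has fewer fields than expected
    except ValueError:
        return None, "ValueError"  # the field is text such as "n/a"
    except TypeError:
        return None, "TypeError"   # the field is None or another unsupported type

print(read_int_field(["7", "42"], 1))  # (42, None)
print(read_int_field(["7"], 1))        # (None, 'IndexError')
print(read_int_field(["n/a"], 0))      # (None, 'ValueError')
print(read_int_field([None], 0))       # (None, 'TypeError')
```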

Error Handling in File Management

Error handling in file management is critical to ensure your script doesn’t halt due to file-related issues. A file might not exist, be inaccessible, or have the wrong format. Proper error handling mitigates these risks.

- Example: Robust file reading.

- The following script demonstrates how to read a file, handling potential `FileNotFoundError` or `IOError` exceptions.

```python
def read_file(filename):
    try:
        with open(filename, "r") as file:
            content = file.read()
        return content
    except FileNotFoundError:
        print(f"Error: File '{filename}' not found.")
        return None
    except IOError as e:  # IOError is an alias of OSError in Python 3
        print(f"Error reading file '{filename}': {e}")
        return None
```

This example gracefully handles potential file-related errors, preventing the script from crashing.

It prints informative error messages, allowing the user to diagnose the problem.

Error Handling in Data Processing

Error handling is equally important in data processing tasks. Inconsistent data formats, missing values, or incorrect calculations can cause issues.

- Example: Validating numerical input.

- This script demonstrates how to validate numerical input before processing.

```python
def process_data(data):
    try:
        value = int(data)
        # Perform data processing here
        return value - 2
    except (ValueError, TypeError):
        # ValueError: text such as "abc"; TypeError: None or another unsupported type
        print("Error: Invalid numerical input.")
        return None
```
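The same idea scales to whole columns with pandas. `pd.to_numeric(..., errors="coerce")` turns unparseable entries into `NaN` instead of raising, so you can report or drop them in one step. The column name and sample values below are hypothetical.

```python
import pandas as pd

# Hypothetical column with a mix of valid and invalid numeric entries
df = pd.DataFrame({"amount": ["10", "7.5", "n/a", "", "3"]})

# Invalid entries become NaN instead of raising ValueError
df["amount_num"] = pd.to_numeric(df["amount"], errors="coerce")

invalid_rows = df[df["amount_num"].isna()]
print(f"{len(invalid_rows)} invalid value(s): {invalid_rows['amount'].tolist()}")

clean = df.dropna(subset=["amount_num"])
print(clean["amount_num"].sum())  # 20.5
```

Reporting the invalid values before dropping them gives you a record of what was discarded, which helps when tracking down problems in the source data.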

Error Handling in Web Tasks

Error handling in web tasks is essential for handling network issues, server errors, or unexpected responses. Using `try-except` blocks can help your script gracefully recover from these problems.

- Example: Fetching data from a website.

- This script demonstrates how to fetch data from a website and handle potential network or server issues.

```python
import requests

def fetch_web_data(url):
    try:
        # A timeout stops the script from hanging on an unresponsive server
        response = requests.get(url, timeout=10)
        response.raise_for_status()  # Raise HTTPError for bad responses (4xx or 5xx)
        return response.text
    except requests.exceptions.RequestException as e:
        print(f"Error fetching data from {url}: {e}")
        return None
```
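Transient network errors often succeed on a second attempt. Below is a minimal, library-agnostic retry helper with exponential backoff. It assumes the wrapped call raises on failure, unlike `fetch_web_data`, which returns `None`. `retry` and its parameters are hypothetical names, not part of `requests`.

```python
import time

def retry(func, attempts=3, delay=1.0, backoff=2.0, exceptions=(Exception,)):
    """Call func() until it succeeds, waiting longer after each failure.

    Re-raises the last exception if every attempt fails.
    """
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except exceptions as e:
            if attempt == attempts:
                raise  # out of attempts: let the caller decide what to do
            print(f"Attempt {attempt} failed ({e}); retrying in {delay:.1f}s")
            time.sleep(delay)
            delay *= backoff
```

With `requests`, you might wrap a small function that calls `requests.get(url, timeout=10)` followed by `raise_for_status()`. Then pass `exceptions=(requests.exceptions.RequestException,)` so that only network and HTTP errors trigger a retry.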

Debugging Automated Scripts

Debugging automated scripts requires a systematic approach. Use print statements strategically to check variable values at different points in your code. Use a debugger (like pdb) to step through your code line by line and inspect variables.

Security Considerations

Automated scripts, while enhancing efficiency, introduce potential security risks if not implemented carefully. Understanding and mitigating these risks is crucial for preventing data breaches, unauthorized access, and system compromise. This section details essential security considerations for automating various tasks, focusing on proactive measures to ensure the safety and integrity of automated processes.

Automated scripts often interact with sensitive data and systems. Improperly designed or implemented scripts can expose this data to malicious actors, leading to significant security vulnerabilities. Consequently, robust security practices are vital to protect against such threats. This section provides actionable strategies to enhance the security posture of automated tasks.

Security Risks Associated with Automation

Automated scripts can introduce several security risks. These include but are not limited to:

- Unintentional Data Exposure: Scripts might inadvertently reveal sensitive data through poorly structured file handling or network interactions. This could include exposing passwords, API keys, or other confidential information.

- Vulnerabilities in External Dependencies: Automated scripts often rely on external libraries or APIs. Security flaws in these external components can expose the entire system to attacks.

- Lack of Input Validation: Failing to validate user input can lead to security vulnerabilities like SQL injection or cross-site scripting (XSS) attacks. Malicious input can disrupt the script or even compromise the underlying system.

- Insufficient Authentication: Automated tasks that interact with sensitive systems or data must implement strong authentication mechanisms to verify the identity of users and processes. Without proper authentication, automated processes can be exploited by malicious actors.

Input Validation and Data Sanitization

Validating user input and sanitizing data is critical to prevent security vulnerabilities. Input validation ensures that data conforms to expected formats and constraints. Data sanitization removes or neutralizes potentially harmful characters or code. These processes are essential to prevent malicious input from compromising automated tasks.

- Data Type Validation: Ensure that input data matches the expected data type. For instance, a script expecting an integer should not accept a string or a floating-point number.

- Range Validation: Validate input data to fall within a specific range. For example, an age input should be within a reasonable range.

- Regular Expression Validation: Employ regular expressions to validate the format of input data, such as email addresses or phone numbers. This helps prevent malicious input patterns.

- Data Sanitization Techniques: Sanitize input data by removing or escaping potentially harmful characters. For example, special characters that could be used in SQL injection attacks.
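The checks above can be combined into a small validation layer. This is a minimal sketch that assumes input arrives as strings, for example from a form or CSV. The 0 to 130 age range, the simplified email pattern, and the helper names are illustrative choices, not a standard. For SQL, the most reliable safeguard is a parameterized query, shown here with the built-in `sqlite3` module, which passes values separately from the SQL text.

```python
import re
import sqlite3

# Simplified pattern for illustration; real email validation is more involved
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}")

def validate_age(raw):
    """Data type and range validation: return an int between 0 and 130."""
    try:
        age = int(raw)
    except (TypeError, ValueError):
        raise ValueError(f"age must be a whole number, got {raw!r}") from None
    if not 0 <= age <= 130:
        raise ValueError(f"age out of range: {age}")
    return age

def validate_email(raw):
    """Regular expression validation: the whole string must match EMAIL_RE."""
    candidate = raw.strip()
    if not EMAIL_RE.fullmatch(candidate):
        raise ValueError(f"invalid email: {raw!r}")
    return candidate

def save_user(conn, email, age):
    # Placeholders (?) keep user data out of the SQL text, preventing injection
    conn.execute("INSERT INTO users (email, age) VALUES (?, ?)", (email, age))

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (email TEXT, age INTEGER)")
save_user(conn, validate_email("ana@example.com"), validate_age("34"))

# Even unvalidated, malicious-looking text is stored as data, not executed
save_user(conn, "x'); DROP TABLE users; --", 0)
print(conn.execute("SELECT COUNT(*) FROM users").fetchone()[0])  # 2
```

Validation rejects malformed input early with a clear message. Parameterized queries remain the safety net even when a validation rule is missing.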

Secure Practices in Web Scraping

Web scraping, while useful for automating data collection, presents unique security concerns. Robust security measures are necessary to prevent disruption to websites and ensure legal compliance.

- Respecting Robots.txt: Always check the target website’s `robots.txt` file to understand which parts of the site are accessible for scraping. Violating these guidelines can lead to account suspension or legal issues.

- Rate Limiting: Implement rate limiting to avoid overloading the target website’s servers with requests. Excessive requests can lead to the website blocking the script or even initiating security measures.

- Avoiding Brute-Force Attacks: Avoid using brute-force techniques for password recovery or login attempts. These actions can lead to account lockout or legal consequences.

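- Using Proper HTTP Headers: Send appropriate HTTP headers, such as a descriptive `User-Agent` that identifies your script (ideally with a contact address). This lets site operators recognize legitimate traffic instead of blocking it as suspicious.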
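Rate limiting and descriptive headers can be combined in a small helper. This is a minimal sketch under stated assumptions. The minimum interval, the `User-Agent` string, and the names `RateLimiter` and `polite_get` are hypothetical. `session` is expected to be a `requests.Session` or any object with a compatible `get` method.

```python
import time

# Hypothetical identifying header; use your own project name and contact address
HEADERS = {"User-Agent": "MyScraperBot/1.0 (contact: you@example.com)"}

class RateLimiter:
    """Ensure at least `min_interval` seconds pass between consecutive calls."""

    def __init__(self, min_interval=1.0):
        self.min_interval = min_interval
        self._last_call = None

    def wait(self):
        now = time.monotonic()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                time.sleep(remaining)
        self._last_call = time.monotonic()

def polite_get(session, url, limiter):
    """Fetch a URL with identifying headers, pausing first if needed."""
    limiter.wait()
    return session.get(url, headers=HEADERS, timeout=10)
```

For example, create `limiter = RateLimiter(1.0)` and `session = requests.Session()` once, then call `polite_get(session, url, limiter)` for each page. Consecutive requests will then be spaced at least one second apart.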

Secure Practices in System Automation

System automation can expose sensitive system resources. Implementing proper security protocols is crucial for maintaining system integrity.

- Least Privilege Principle: Grant automated scripts only the necessary permissions to perform their tasks. This minimizes the potential impact of a security breach.

- Regular Security Audits: Conduct regular security audits of automated scripts and systems to identify and address vulnerabilities before they are exploited.

- Secure Storage of Credentials: Never hardcode sensitive credentials (passwords, API keys) directly into scripts. Use secure configuration management tools to store and manage these credentials securely.

- Using Strong Passwords: Employ strong, unique passwords for accounts used by automated scripts.
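One simple way to keep credentials out of source code is to read them from environment variables at runtime. Your scheduler, CI system, or secrets manager sets the variables. This sketch assumes a hypothetical variable named `SERVICE_API_KEY`. The `get_secret` helper fails loudly rather than falling back to a hardcoded value.

```python
import os

def get_secret(name):
    """Return the value of a required environment variable or raise an error."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Missing required environment variable: {name}")
    return value

# os.environ is set here only to make the demo self-contained;
# in real use the variable is set outside the script.
os.environ["SERVICE_API_KEY"] = "demo-key-not-a-real-secret"
api_key = get_secret("SERVICE_API_KEY")
print("API key loaded (value hidden)")  # never print or log the secret itself
```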

User Authentication in Automated Tasks

Secure user authentication is critical for controlling access to sensitive data and resources. Strong authentication mechanisms protect automated tasks from unauthorized access.

- Implementing Multi-Factor Authentication (MFA): Adding MFA to automated tasks enhances security by requiring multiple authentication factors, such as a password and a code from a security device or application.

- Using Secure Authentication Protocols: Employ standard protocols such as OAuth 2.0 (an authorization framework; OpenID Connect adds authentication on top of it). Automated tasks then use scoped, expiring access tokens instead of user passwords, so only authorized clients can access sensitive resources.

- Regularly Updating Authentication Mechanisms: Keep authentication mechanisms up-to-date to address security vulnerabilities and exploit attempts.

Best Practices and Further Learning

Mastering automation requires not only understanding the tools but also adopting best practices for efficiency and maintainability. Proper code organization, comprehensive documentation, and ongoing learning are crucial for long-term success. This section details key strategies for building robust and scalable Python automation scripts.

Effective Python automation scripts are built on the foundation of clear structure and maintainability. Employing these best practices ensures that your scripts remain usable and adaptable over time.

Best Practices for Writing Efficient and Maintainable Scripts

Following established best practices ensures that your scripts are easy to understand, modify, and debug. These principles also improve their performance and reliability.

- Modular Design: Break down complex tasks into smaller, focused functions. Each function can then be read, tested, and reused on its own, which keeps debugging manageable as the script grows.

- Meaningful Variable Names: Use descriptive names for variables and functions that reflect their purpose. The script’s intent is then clear at a glance, and mistakes are easier to spot.

- Comments: Add comments to explain complex logic or the purpose of specific sections. Comments that explain why a block exists help whoever reviews or modifies the script later.

- Error Handling: Implement robust error handling to catch and manage potential issues gracefully. The script then avoids unexpected crashes and reports informative messages about the source of each error.
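Here is a minimal sketch of these practices in one small script. Each step is a separate, descriptively named function with a docstring. Errors are handled where they can be reported meaningfully. A `main()` function ties the steps together behind the standard `if __name__ == "__main__":` guard. The task itself (counting files per extension in a folder) and all function names are hypothetical.

```python
import sys
from collections import Counter
from pathlib import Path

def list_files(folder):
    """Return all regular files directly inside folder."""
    return [p for p in Path(folder).iterdir() if p.is_file()]

def count_by_extension(files):
    """Count files per lowercase extension ('' for files without one)."""
    return Counter(p.suffix.lower() for p in files)

def format_report(counts):
    """Render the counts as lines like '.txt: 3', most common first."""
    return "\n".join(f"{ext or '(none)'}: {n}" for ext, n in counts.most_common())

def main(folder="."):
    """Print a per-extension file count for folder."""
    try:
        files = list_files(folder)
    except OSError as e:
        print(f"Cannot read folder {folder}: {e}", file=sys.stderr)
        return
    print(format_report(count_by_extension(files)))

if __name__ == "__main__":
    main(*sys.argv[1:2])  # optional folder argument, defaults to "."
```

Because each function does one thing, `count_by_extension` and `format_report` can be tested without touching the file system.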

Documentation and Code Organization

Comprehensive documentation is essential for maintaining and collaborating on automation scripts. Good code organization promotes readability and reduces the likelihood of errors.

- Docstrings: Include clear docstrings in functions to describe their purpose, parameters, and return values. They document each function where it is defined, which helps anyone who later reviews, modifies, or reuses the script.

- Code Formatting: Follow a consistent style guide such as PEP 8. Consistent formatting makes scripts easier to read, review, and maintain.

- Version Control: Use a version control system such as Git to track changes to your scripts over time. You can then roll back to a previous version when a change causes problems, and several developers can collaborate on the same scripts.
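As an illustration of the docstring advice, here is a sketch of a function documented with its purpose, parameters, return value, and usage examples. The `>>>` lines also serve as tests that Python’s built-in `doctest` module can run. The function `normalize_filename` and this docstring layout are illustrative; PEP 257 describes the general docstring conventions.

```python
import doctest
import re

def normalize_filename(name, separator="_"):
    """Convert a filename into a lowercase, filesystem-friendly form.

    Runs of spaces and punctuation in the name are replaced by
    `separator`; the extension is kept and lowercased.

    Args:
        name: The original filename, e.g. "Q3 Report (Final).PDF".
        separator: Character used in place of spaces and punctuation.

    Returns:
        The normalized filename as a string.

    >>> normalize_filename("Q3 Report (Final).PDF")
    'q3_report_final.pdf'
    >>> normalize_filename("notes.txt")
    'notes.txt'
    """
    stem, dot, ext = name.rpartition(".")
    if not dot:  # no extension present
        stem, ext = name, ""
    stem = re.sub(r"[^A-Za-z0-9]+", separator, stem).strip(separator).lower()
    return f"{stem}.{ext.lower()}" if ext else stem

if __name__ == "__main__":
    doctest.testmod()  # runs the >>> examples in the docstrings above
```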

Additional Resources for Learning More About Automation with Python

Exploring further resources can significantly enhance your automation skills.

- Online Courses: Numerous online platforms offer Python automation courses with structured learning paths and hands-on exercises.

- Python Documentation: The official Python documentation covers the standard library modules used in this guide, such as `os`, `shutil`, `pathlib`, `subprocess`, and `logging`, with detailed explanations and examples.

- Community Forums: Engage with online Python communities to ask questions, share solutions, and learn from others’ experiences.

Optimizing Python Scripts

These tips help in optimizing Python scripts for improved efficiency and performance.

| Tip | Description |
|---|---|
| Use appropriate data structures | Employ efficient data structures like lists, dictionaries, or sets based on the task’s needs. |
| Vectorized operations | Leverage NumPy for numerical computations to improve performance. |
| Avoid redundant calculations | Store results of calculations to avoid repetitive computations. |
| Use generators | Use generators for memory-efficient processing of large datasets. |
| Profiling | Use profiling tools to identify performance bottlenecks. |
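The sketch below illustrates two of these tips. A generator yields matching lines lazily, so a large log never has to fit in memory. `functools.lru_cache` stores the results of a repeated calculation. The log contents and the `error_lines` and `parse_size` helpers are hypothetical.

```python
import io
from functools import lru_cache

def error_lines(stream):
    """Yield stripped lines containing 'ERROR', one at a time (lazy, low memory)."""
    for line in stream:
        if "ERROR" in line:
            yield line.strip()

@lru_cache(maxsize=None)
def parse_size(text):
    """Convert sizes like '10K' or '3M' to bytes; repeated inputs hit the cache."""
    units = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}
    if text[-1] in units:
        return int(text[:-1]) * units[text[-1]]
    return int(text)

# io.StringIO stands in for an open log file; both are iterated line by line
log = io.StringIO("INFO start\nERROR disk full\nINFO retry\nERROR timeout\n")
print(list(error_lines(log)))  # ['ERROR disk full', 'ERROR timeout']

sizes = ["10K", "3M", "10K", "10K"]
print(sum(parse_size(s) for s in sizes))  # 3176448
print(parse_size.cache_info().hits)  # 2
```

For profiling, the standard library’s `cProfile` module (for example `python -m cProfile -s cumtime script.py`) shows where a script actually spends its time before you optimize anything.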

Essential Python Libraries for Automation

A concise summary of crucial Python libraries for automation tasks.

- `os`: Provides functions for interacting with the operating system.

- `shutil`: Offers high-level file operations.

- `pathlib`: Provides an object-oriented approach to file paths.

- `pandas`: Facilitates data manipulation and analysis.

- `requests`: Simplifies interaction with web APIs.

- `beautifulsoup4`: Extracts data from HTML and XML.

- `selenium`: Controls web browsers for dynamic interactions.

Final Wrap-Up

In conclusion, this guide has provided a thorough exploration of automating everyday tasks with Python. By mastering the techniques presented, you can significantly enhance your productivity and efficiency. Remember to prioritize security, handle errors gracefully, and optimize your scripts for maintainability. The examples and resources provided offer a solid foundation for further exploration and application in your own projects.

We encourage you to practice these skills and implement them in your daily routines.