This guide, “How to Get Started with Go (Golang) for High-Performance Apps,” provides a structured approach to leveraging the power of Go for building robust and high-performing applications. We’ll explore Go’s key features, from its elegant syntax and concurrency mechanisms to its efficient memory management, equipping you with the knowledge to craft applications that excel in performance and scalability.

From foundational concepts like data structures and algorithms to advanced techniques such as concurrency and I/O optimization, this comprehensive tutorial will walk you through the essential steps to master Go. We’ll delve into practical examples, real-world applications, and crucial best practices, ensuring a solid understanding of how to build high-performance applications in Go.

Introduction to Go (Golang)

Go, often referred to as Golang, is a statically typed, compiled programming language designed at Google. It prioritizes simplicity, efficiency, and concurrency, making it an excellent choice for building high-performance applications, particularly in areas demanding speed and scalability. Its elegant syntax and robust standard library contribute to a productive development experience.

Go’s performance advantages come from a few deliberate design choices: ahead-of-time compilation to native machine code, a garbage collector designed for short pauses, and a built-in concurrency model based on goroutines and channels. Together these keep execution fast and resource consumption modest.

Go Key Features

Go’s design emphasizes several key features that make it suitable for high-performance applications. These features contribute to its speed and efficiency.

- Concurrency Model: Go’s built-in concurrency model, leveraging goroutines and channels, allows for concurrent execution of tasks without the complexity of traditional threading. This concurrency model enables efficient use of multi-core processors, significantly enhancing application performance.

- Static Typing: Static typing, a feature of Go, helps catch errors early in the development process. This leads to more robust and reliable code. The compiler’s ability to optimize code during compilation also plays a role in performance enhancement.

- Automatic Garbage Collection: Go’s automatic garbage collection frees developers from manual memory management. This simplifies development and eliminates whole classes of memory errors, such as use-after-free and double-free bugs, at the cost of some CPU time spent on collection.

- Compiled Language: As a compiled language, Go translates source code ahead of time into native machine code, typically producing a single executable. This generally yields faster execution than interpreted languages, because no interpreter has to run alongside the program.

Installation Guide

The installation process for Go is straightforward across various operating systems.

- Windows: Download the appropriate installer from the official Go website and follow the on-screen instructions. The installer handles the necessary configuration and installation.

- macOS: Download the macOS package installer (.pkg) from the official Go website and run it. It installs Go under `/usr/local/go` and adds `/usr/local/go/bin` to your PATH; you may need to restart open terminal sessions for the change to take effect.

- Linux: Download the Linux archive from the official Go website, extract it into `/usr/local` (which creates `/usr/local/go`), and add `/usr/local/go/bin` to your PATH environment variable, for example in `$HOME/.profile`. Distribution package managers also ship Go, but their versions can lag behind the latest release.

“Hello, World!” Example

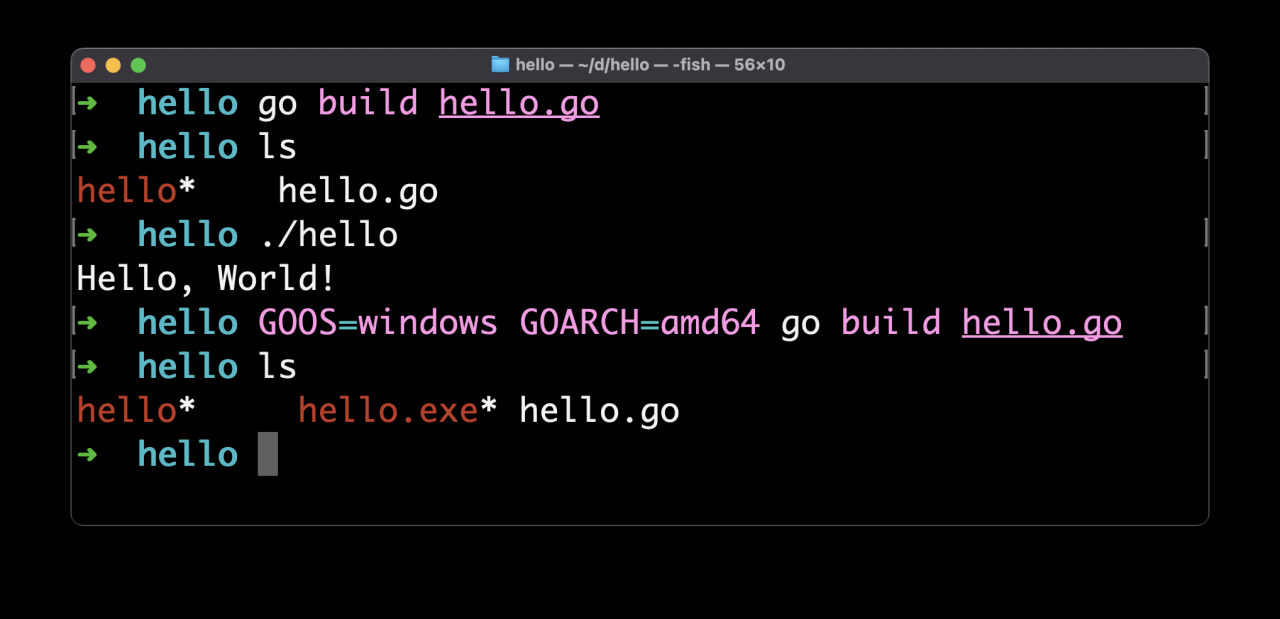

The following example demonstrates a basic “Hello, World!” program in Go, showcasing the language’s straightforward syntax.

```go
package main

import "fmt"

func main() {
	fmt.Println("Hello, World!")
}
```

This program uses the `fmt` package for formatted I/O, and the `main` function in package `main` is the program’s entry point.

Performance Comparison

The following table gives a qualitative comparison of Go’s execution speed with other popular programming languages. These are general impressions rather than benchmark results; actual performance depends heavily on the specific implementation and the nature of the application being developed.

| Language | Performance (General Impression) | Strengths |
|---|---|---|
| Go | Generally high | Excellent concurrency support, compiled nature, efficient memory management |
| Python | Generally moderate | Readability, extensive libraries |
| Java | Generally high | Platform independence, mature ecosystem |
| C++ | Generally high | Fine-grained control over memory, highly optimized |

Data Structures and Algorithms in Go

Go’s built-in data structures, coupled with its efficient memory handling, make it a strong choice for high-performance applications. Choosing the right data structure for a task is vital: an inappropriate choice can significantly increase both the execution time and the memory footprint of your program, particularly when dealing with large datasets.

This section covers Go’s fundamental data structures (arrays, slices, maps) from a performance perspective, along with common algorithms and their time complexities.

Built-in Data Structures and Optimization

Go provides several built-in data structures that are well-suited for various tasks. Properly utilizing these structures can significantly enhance performance.

- Arrays: Arrays in Go are fixed-size sequences of elements of the same type; the length is part of the type, so `[4]int` and `[5]int` are distinct types. This makes them suitable when the size of the data is known beforehand, for instance a predefined set of sensor readings taken at fixed intervals, or a table of constants. Elements are accessed by index in constant time and can be modified in place, but an array can never grow: holding more elements requires allocating a new, larger array and copying the data. Arrays are also values, so assigning an array or passing it to a function copies every element, which can be costly for large arrays.

- Slices: Slices are dynamically sized views onto an underlying array, described by a pointer, a length, and a capacity. They grow with the built-in `append`, which writes into spare capacity when it is available and otherwise allocates a larger backing array and copies the existing elements. Because capacity grows geometrically, repeated appends are cheap on average, which makes slices the natural choice when the amount of data is not fixed, such as when handling network packets or processing data from a stream. When the final size is known, preallocating with `make([]T, 0, n)` avoids the intermediate reallocations.

- Maps: Maps in Go are hash tables of key-value pairs. Lookups, insertions, and deletions take constant time on average, which makes maps well suited to storing and retrieving data by a unique identifier, such as finding a user’s details by their ID. Two caveats matter for performance work: iteration order is unspecified, and a map must not be written from multiple goroutines concurrently without synchronization.
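The behaviors described above can be checked with a short program. This is a minimal sketch; the helper names `arrayCopyIsIndependent`, `collectEvens`, and `lookupUser` and the sample data are hypothetical:

```go
package main

import "fmt"

// arrayCopyIsIndependent shows that arrays are values: assigning one copies
// every element, so changing the copy leaves the original untouched.
func arrayCopyIsIndependent() (orig, copied [3]int) {
	orig = [3]int{1, 2, 3}
	copied = orig  // full copy of the array
	copied[0] = 99 // does not affect orig
	return orig, copied
}

// collectEvens preallocates capacity so append never has to reallocate,
// since the result can hold at most len(nums) elements.
func collectEvens(nums []int) []int {
	out := make([]int, 0, len(nums))
	for _, n := range nums {
		if n%2 == 0 {
			out = append(out, n)
		}
	}
	return out
}

// lookupUser uses the "comma ok" form to distinguish a missing key from a
// key whose value happens to be the zero value.
func lookupUser(users map[int]string, id int) (string, bool) {
	name, ok := users[id]
	return name, ok
}

func main() {
	orig, copied := arrayCopyIsIndependent()
	fmt.Println(orig, copied) // [1 2 3] [99 2 3]

	fmt.Println(collectEvens([]int{1, 2, 3, 4, 5, 6})) // [2 4 6]

	users := map[int]string{42: "alice"}
	if name, ok := lookupUser(users, 42); ok {
		fmt.Println("found:", name)
	}
	if _, ok := lookupUser(users, 7); !ok {
		fmt.Println("user 7 not found")
	}
}
```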

Algorithm Implementation and Efficiency

Understanding and applying appropriate algorithms is crucial for effective programming.

- Sorting: Go’s `sort` package provides efficient O(n log n) sorting. Rather than picking an algorithm such as quicksort or mergesort by hand, you choose between unstable sorts (`sort.Sort`, `sort.Slice`, `sort.Strings`) and stable sorts (`sort.Stable`, `sort.SliceStable`), which preserve the original order of equal elements at some extra cost. Since Go 1.21, the generic `slices.Sort` and `slices.SortFunc` functions are also available. For example, sorting a large list of product names alphabetically is a single `sort.Strings` call.

- Searching: Binary search finds an element in a sorted collection in O(log n) time by halving the search space at each step, compared with O(n) for a linear scan. The `sort` package provides `sort.Search` and helpers such as `sort.SearchInts` and `sort.SearchStrings`; since Go 1.21, `slices.BinarySearch` is available as well. A typical use is locating a customer record by ID in a large, sorted in-memory list.

Handling Large Datasets

Large datasets call for deliberate techniques, since loading everything into memory at once or processing it serially can dominate both run time and memory use.

- Chunking: Breaking down large datasets into smaller, manageable chunks can improve efficiency. For example, processing a large file by reading and processing it in smaller blocks can significantly reduce memory usage and improve performance. This technique prevents loading the entire dataset into memory at once.

- Concurrency: Go’s concurrency features can speed up large-dataset processing by dividing the work across multiple goroutines, so that different parts of the data are processed simultaneously. For I/O-bound work this overlaps waiting time; for CPU-bound work the speedup is bounded by the number of cores available to the runtime (`GOMAXPROCS`), and for small inputs the coordination overhead can outweigh the gain.
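The two techniques combine naturally: split the data into chunks and give each chunk to its own goroutine. A minimal sketch, assuming the work is a simple sum (the function name `parallelSum` and the chunk size are illustrative):

```go
package main

import (
	"fmt"
	"sync"
)

// parallelSum splits data into chunks of at most chunkSize elements, sums
// each chunk in its own goroutine, and combines the partial sums.
// Each goroutine writes only to its own slot in partial, so no lock is needed.
func parallelSum(data []int, chunkSize int) int {
	if chunkSize <= 0 {
		chunkSize = 1
	}
	numChunks := (len(data) + chunkSize - 1) / chunkSize
	partial := make([]int, numChunks)

	var wg sync.WaitGroup
	for c := 0; c < numChunks; c++ {
		start := c * chunkSize
		end := start + chunkSize
		if end > len(data) {
			end = len(data)
		}
		wg.Add(1)
		go func(idx int, chunk []int) {
			defer wg.Done()
			for _, v := range chunk {
				partial[idx] += v
			}
		}(c, data[start:end])
	}
	wg.Wait() // all partial sums are complete after this point

	total := 0
	for _, s := range partial {
		total += s
	}
	return total
}

func main() {
	data := make([]int, 1_000_000)
	for i := range data {
		data[i] = i + 1
	}
	fmt.Println(parallelSum(data, 100_000))
}
```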

Time Complexity of Operations

This table provides a summary of the time complexities of various operations on Go’s built-in data structures.

| Data Structure | Operation | Time Complexity |
|---|---|---|
| Array | Access element by index | O(1) |
| Array | Append element | Not supported (fixed size) |
| Slice | Access element by index | O(1) |
| Slice | Append element | O(1) amortized, O(n) when the backing array must be reallocated |
| Map | Access element by key | O(1) on average |
| Map | Insert element | O(1) on average |
| Map | Delete element | O(1) on average |

Concurrency and Goroutines

Go’s concurrency model, built on goroutines and channels, lets a program make progress on multiple tasks at once. This can significantly improve responsiveness and throughput, particularly for I/O-bound tasks. This section covers the core concepts of concurrency and parallelism in Go and how goroutines and channels support concurrent programming.

Concurrency is not the same as parallelism. Concurrency means structuring a program so that multiple tasks can be in progress at the same time, whereas parallelism means multiple tasks are literally executing simultaneously on multiple processor cores. Go’s concurrency model works well even on single-core systems, and when more cores are available the runtime can also execute goroutines in parallel.

Goroutines and Channels

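Goroutines are lightweight threads of execution managed by the Go runtime: any function call prefixed with the `go` keyword runs in a new goroutine, in the same address space as the rest of the program. Goroutines start with a small stack that grows as needed, so creating thousands of them is cheap. The runtime scheduler multiplexes them onto a smaller number of OS threads, up to `GOMAXPROCS` of which execute Go code simultaneously. Channels are typed conduits for communication between goroutines; a send on an unbuffered channel blocks until another goroutine receives, so channels both transfer data and synchronize the goroutines involved.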
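A minimal sketch of one goroutine producing values on a channel while the main goroutine consumes them; the function name `squares` is illustrative:

```go
package main

import "fmt"

// squares starts a goroutine that sends n*n for each input on an unbuffered
// channel, then closes the channel to signal that no more values will come.
func squares(nums []int) <-chan int {
	out := make(chan int)
	go func() {
		defer close(out) // lets the receiver's range loop terminate
		for _, n := range nums {
			out <- n * n // blocks until the receiver is ready
		}
	}()
	return out
}

func main() {
	for sq := range squares([]int{1, 2, 3, 4}) {
		fmt.Println(sq)
	}
}
```

Closing the channel from the sending side is what allows `range` on the receiving side to finish cleanly.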

Concurrent Task Examples

This section presents examples of concurrent tasks handled effectively using goroutines and channels. A common scenario is web server processing. Multiple requests can be handled concurrently by launching goroutines to serve each request.

- Web Server Request Handling: A web server can receive numerous requests simultaneously. Go’s `net/http` server already serves each incoming connection in its own goroutine, so a slow request does not block the others, and channels can be used inside handlers to coordinate work such as parallel calls to backend services. This lets a single server process handle a high volume of concurrent requests, which matters for websites with substantial traffic.

- Data Processing: Imagine a large dataset needing processing. Dividing the dataset into smaller chunks and assigning each chunk to a goroutine for independent processing can significantly reduce the overall processing time. Channels facilitate the gathering of results from each goroutine and merging them into a unified result. This approach is applicable in scenarios involving large datasets like image processing or financial data analysis.
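The data-processing pattern above is often implemented as a worker pool: a fixed number of goroutines read jobs from one channel and write results to another. The following is a minimal sketch; the per-item `process` function and the worker count are hypothetical parameters, and results carry their input index so the output order matches the input even though workers finish in any order:

```go
package main

import (
	"fmt"
	"sync"
)

// result pairs an input index with its processed value.
type result struct {
	index int
	value int
}

// processAll fans jobs out to numWorkers goroutines over a jobs channel and
// fans results back in over a results channel.
func processAll(items []int, numWorkers int, process func(int) int) []int {
	if numWorkers < 1 {
		numWorkers = 1
	}
	jobs := make(chan int) // carries indexes into items
	results := make(chan result)

	var wg sync.WaitGroup
	for w := 0; w < numWorkers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := range jobs {
				results <- result{index: i, value: process(items[i])}
			}
		}()
	}

	// Feed jobs, then close the channel so workers exit their range loops.
	go func() {
		for i := range items {
			jobs <- i
		}
		close(jobs)
	}()

	// Close results once every worker has finished sending.
	go func() {
		wg.Wait()
		close(results)
	}()

	out := make([]int, len(items))
	for r := range results {
		out[r.index] = r.value
	}
	return out
}

func main() {
	doubled := processAll([]int{3, 1, 4, 1, 5}, 3, func(x int) int { return x * 2 })
	fmt.Println(doubled)
}
```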

Race Condition Management

Concurrent programs must synchronize goroutines that share data. A race condition (more precisely, a data race) occurs when multiple goroutines access the same variable concurrently, at least one of them writes, and nothing synchronizes the accesses. The result is unpredictable and incorrect program behavior. Channels, mutexes, and atomic operations all prevent such races, and Go’s built-in race detector, enabled with the `-race` flag (as in `go test -race` or `go run -race`), reports races it observes at run time.

- Synchronization Primitives: The `sync` package provides mutexes (`sync.Mutex`, plus `sync.RWMutex` for read-heavy workloads) that allow controlled access to shared resources. A mutex acts as a lock, ensuring only one goroutine at a time executes the code that touches a shared resource. For simple counters and flags, the `sync/atomic` package performs individual operations on variables atomically without explicit locking. Proper use of these mechanisms is crucial for reliable concurrent programs.
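A minimal sketch contrasting the two approaches: a map guarded by a `sync.Mutex`, and a counter updated with `sync/atomic`. The type `SafeCounter` and the helper `countConcurrently` are hypothetical names; without the lock, running this with `-race` would report the concurrent map writes:

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// SafeCounter guards a map with a mutex so many goroutines can update it.
type SafeCounter struct {
	mu     sync.Mutex
	counts map[string]int
}

// Inc increments the count for key while holding the lock.
func (c *SafeCounter) Inc(key string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.counts[key]++
}

// Value reads the count for key while holding the lock.
func (c *SafeCounter) Value(key string) int {
	c.mu.Lock()
	defer c.mu.Unlock()
	return c.counts[key]
}

// countConcurrently increments both a mutex-protected map entry and an
// atomic int64 from n goroutines and returns the final values.
func countConcurrently(n int) (mutexCount int, atomicCount int64) {
	c := SafeCounter{counts: make(map[string]int)}
	var hits int64

	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			c.Inc("hits")
			atomic.AddInt64(&hits, 1) // lock-free increment
		}()
	}
	wg.Wait()
	return c.Value("hits"), atomic.LoadInt64(&hits)
}

func main() {
	m, a := countConcurrently(1000)
	fmt.Println("mutex:", m, "atomic:", a)
}
```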

Concurrent Request Processing

A program that uses goroutines to handle multiple requests concurrently can finish much sooner than one that handles them one after another. The example below processes five simulated requests concurrently.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// processRequest simulates handling one request that takes one second.
func processRequest(id int) {
	time.Sleep(1 * time.Second) // simulate some work
	fmt.Printf("Processing request %d\n", id)
}

func main() {
	var wg sync.WaitGroup
	numRequests := 5
	start := time.Now()

	for i := 0; i < numRequests; i++ {
		wg.Add(1) // register the goroutine before starting it
		go func(requestID int) {
			defer wg.Done() // mark this request as finished
			processRequest(requestID)
		}(i) // pass i explicitly so each goroutine gets its own copy
	}

	wg.Wait() // block until every request has been processed
	fmt.Printf("All requests processed in %v.\n", time.Since(start).Round(time.Millisecond))
}
```

This program starts one goroutine per request, and the `sync.WaitGroup` ensures all of them complete before `main` returns. Because each simulated request sleeps for one second and all five run concurrently, the program finishes in roughly one second instead of the roughly five seconds a sequential loop would take; the order of the “Processing request” lines varies from run to run. The same pattern adapts to other concurrent request-processing scenarios.

Memory Management and Optimization

Go’s memory management, built on automatic garbage collection, significantly simplifies development. It is generally efficient, but high-performance applications still need to pay attention to how they allocate memory in order to avoid bottlenecks.

Because the runtime reclaims memory automatically, developers never free memory by hand, which rules out errors such as double frees and dangling pointers. Memory can still leak, however, if the program keeps references to data it no longer needs, so understanding how the GC works and how it affects performance remains important.

Go’s Automatic Garbage Collection

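Go’s garbage collector (GC) reclaims memory occupied by objects that are no longer reachable from the program’s roots, such as global variables and goroutine stacks. It is a concurrent mark-and-sweep collector: most of its work runs alongside the program, with only brief stop-the-world phases, but it still consumes CPU time and can add latency. Its cost depends mainly on the size of the live heap and the rate of allocation.

The main tuning knob is the `GOGC` environment variable (or `debug.SetGCPercent` from `runtime/debug`), which controls how much the heap may grow before the next collection; since Go 1.19, `GOMEMLIMIT` (or `debug.SetMemoryLimit`) sets a soft memory limit. Reducing the allocation rate is usually the most effective way to minimize the impact of GC on performance.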

Identifying and Addressing Memory Leaks

Memory leaks in Go applications occur when memory remains reachable, and therefore cannot be collected, after the program no longer needs it, leading to gradual resource depletion. Identifying them involves examining memory usage over time. Go’s built-in profiler is the main tool: heap profiles collected with `runtime/pprof` or served by `net/http/pprof` can be analyzed with `go tool pprof` to pinpoint allocation hotspots and steadily growing allocations.

Common causes include caches or global maps that grow without bound, goroutines that block forever and keep their stacks and referenced data alive, and small sub-slices that keep a much larger backing array reachable. Circular references by themselves do not leak, because Go’s tracing collector reclaims unreachable cycles.
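The sub-slice case is easy to miss, because the slice looks small even though it pins the whole backing array. A minimal sketch of the problem and the usual fix (copying only the bytes that are needed); the helper names and the 10 MiB buffer are hypothetical:

```go
package main

import "fmt"

// headerView returns a sub-slice that shares buf's backing array,
// so all of buf stays reachable for as long as the result is kept.
func headerView(buf []byte, n int) []byte {
	return buf[:n]
}

// headerCopy copies the first n bytes into a new, right-sized slice,
// so buf can be garbage-collected once the caller drops it.
func headerCopy(buf []byte, n int) []byte {
	out := make([]byte, n)
	copy(out, buf[:n])
	return out
}

func main() {
	big := make([]byte, 10<<20) // 10 MiB, e.g. a whole file read into memory
	copy(big, "HDR1")

	view := headerView(big, 4)
	owned := headerCopy(big, 4)
	// view's capacity still spans the full 10 MiB array; owned holds 4 bytes.
	fmt.Println(cap(view), cap(owned))
}
```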

Memory Optimization Techniques

Efficient memory usage starts with minimizing unnecessary allocations. Object pooling, where objects are kept and handed out again instead of being reallocated (the standard library provides `sync.Pool` for this), can reduce the workload on the garbage collector. Preallocating slices and maps with `make` when their size is known avoids repeated growth, and storing values directly rather than through pointers, where practical, can reduce both allocations and the amount of memory the GC has to scan.

Appropriate data structures and algorithms can drastically reduce memory usage.
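A minimal sketch of object pooling with `sync.Pool`, reusing `bytes.Buffer` values across calls; the function `formatGreeting` is a hypothetical workload:

```go
package main

import (
	"bytes"
	"fmt"
	"sync"
)

// bufPool hands out reusable *bytes.Buffer values; New is called only
// when the pool has nothing to give back.
var bufPool = sync.Pool{
	New: func() any { return new(bytes.Buffer) },
}

// formatGreeting builds a string using a pooled buffer instead of
// allocating a fresh buffer on every call.
func formatGreeting(name string) string {
	buf := bufPool.Get().(*bytes.Buffer)
	buf.Reset() // a pooled buffer may still hold data from a previous user
	defer bufPool.Put(buf)

	buf.WriteString("Hello, ")
	buf.WriteString(name)
	buf.WriteString("!")
	return buf.String() // String copies the bytes, so returning it is safe
}

func main() {
	for _, n := range []string{"Ada", "Linus", "Grace"} {
		fmt.Println(formatGreeting(n))
	}
}
```

`sync.Pool` may discard pooled objects during garbage collection, so it behaves as a cache rather than a guaranteed free list, and its benefit should be confirmed with a benchmark. The `any` type requires Go 1.18 or later.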

Examples of Memory-Intensive Operations and Optimization

Processing large datasets, particularly with frequent allocations and deallocations, can significantly impact performance. Consider reusing a single large buffer for data loading instead of allocating many small buffers repeatedly. String building inside loops deserves attention too: because Go strings are immutable, repeated `+=` concatenation allocates a new string each time, whereas `strings.Builder` appends into one growing buffer. Finally, algorithms that process less data, or that have better time complexity, often reduce memory use as well.
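A minimal sketch comparing `+=` concatenation with `strings.Builder`; the function names are hypothetical and both produce the same string:

```go
package main

import (
	"fmt"
	"strings"
)

// joinNaive uses += in a loop; each iteration allocates a new string and
// copies everything built so far, so total copying grows quadratically.
func joinNaive(parts []string) string {
	s := ""
	for _, p := range parts {
		s += p + ","
	}
	return s
}

// joinBuilder appends into one strings.Builder whose buffer is sized once
// up front, so the final string needs a single allocation.
func joinBuilder(parts []string) string {
	size := 0
	for _, p := range parts {
		size += len(p) + 1
	}
	var b strings.Builder
	b.Grow(size)
	for _, p := range parts {
		b.WriteString(p)
		b.WriteByte(',')
	}
	return b.String()
}

func main() {
	parts := []string{"alpha", "beta", "gamma"}
	fmt.Println(joinBuilder(parts))
	fmt.Println(joinNaive(parts) == joinBuilder(parts))
}
```

For this exact task `strings.Join(parts, ",")` would also work (without the trailing comma); `strings.Builder` is the general tool when a loop does more than join.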

Memory Management Strategies

| Strategy | Description | Potential Impact on Performance |
|---|---|---|
| Automatic Garbage Collection | The Go runtime manages memory allocation and deallocation automatically. | Generally efficient, but collection costs CPU time and brief pauses can occur during GC cycles. |
| Manual Memory Management (via cgo, e.g. `C.malloc`/`C.free`) | Memory allocated on the C side lives outside the Go heap and must be freed explicitly. | Potentially useful in specific cases, but cgo calls carry overhead and mistakes cause leaks or crashes. |
| Object Pooling (e.g. `sync.Pool`) | Keep and reuse objects instead of reallocating them. | Can reduce allocation rate and GC pressure in applications that create many short-lived objects. |

Manual memory management, while providing potential performance advantages in some scenarios, carries a higher risk of memory leaks and requires careful management. Object pooling, when applied appropriately, can significantly enhance performance by reducing the overhead of frequent allocations.

Input/Output Operations

Efficient file handling is crucial for any high-performance application. Go’s standard library provides a solid foundation for working with files and other input/output streams. Understanding these operations is essential for optimizing performance, especially when dealing with large datasets.

File I/O Operations in Go

The `os` package provides functions for basic file operations such as creating, opening, reading, and writing files. The `io` package defines the general-purpose `io.Reader` and `io.Writer` interfaces, which `*os.File` implements, and the `bufio` package wraps them with buffering. Because most I/O code is written against these interfaces, the same functions work with files, network connections, and in-memory buffers.

Reading Data from Files

Reading data from files involves opening the file, reading the content, and closing it. Correctly handling errors is paramount, especially when dealing with potentially large or unreliable files.

- Using `os.Open` to open a file safely: `os.Open` opens a file for reading and returns an `*os.File` together with an error, allowing you to handle problems such as the file not existing or being inaccessible. For instance, `file, err := os.Open("mydata.txt")` opens the file named `mydata.txt`, stores the file handle in `file`, and stores any error encountered in `err`.

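- Reading data using `io.Reader`: The `io.Reader` interface is crucial for reading data from various sources, not just files. For large files, wrapping the file in a `bufio.Reader` (via `bufio.NewReader`) or a `bufio.Scanner` (via `bufio.NewScanner`) is usually preferred, because buffering reduces the number of system calls. Note that a `bufio.Scanner` rejects lines longer than 64 KiB by default; `Scanner.Buffer` raises that limit.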

- Example of reading a file line by line using a `for` loop: This illustrates how to read data from a file line by line, handling errors appropriately.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
)

func main() {
	file, err := os.Open("mydata.txt")
	if err != nil {
		fmt.Println("Error opening file:", err)
		return
	}
	defer file.Close() // Ensures the file is closed even if errors occur

	scanner := bufio.NewScanner(file)
	for scanner.Scan() {
		fmt.Println(scanner.Text())
	}
	if err := scanner.Err(); err != nil {
		fmt.Println("Error reading file:", err)
	}
}
```

Writing Data to Files

Writing data to files involves opening the file for writing, writing the content, and closing it. Error handling is equally important in writing operations.

- Using `os.Create` to create a file: This function creates the named file for writing, truncating it if it already exists. Like `os.Open`, it returns an error for problems such as a missing directory or insufficient permissions.

- Writing data using `io.Writer`: The `io.Writer` interface provides a flexible way to write data to various destinations, including files. Buffered writing using `bufio.NewWriter` is frequently employed for performance gains with large files.

- Example of writing data to a file: This demonstrates how to write data to a file, handling potential errors.

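```go
package main

import (
	"bufio"
	"fmt"
	"os"
)

func main() {
	file, err := os.Create("output.txt") // creates or truncates output.txt
	if err != nil {
		fmt.Println("Error creating file:", err)
		return
	}
	defer file.Close()

	writer := bufio.NewWriter(file)
	if _, err := writer.WriteString("This is some data.\n"); err != nil {
		fmt.Println("Error writing data:", err)
		return
	}
	// Flush is crucial: buffered data is not written to the file until it is called.
	if err := writer.Flush(); err != nil {
		fmt.Println("Error flushing data:", err)
	}
}
```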

Optimizing File I/O Performance

Several techniques can be employed to enhance the performance of file I/O operations, particularly for large files. Buffered I/O is a key optimization strategy.

- Employing buffered I/O: `bufio.Reader` and `bufio.Writer` batch many small reads or writes into fewer, larger system calls, which typically yields a notable performance improvement.

- Asynchronous I/O with Channels: Running an I/O operation in its own goroutine lets the rest of the program continue with other work, and a channel delivers the result (or error) when the operation completes. This is particularly useful when several independent I/O operations can overlap.

Asynchronous I/O with Channels

Leveraging channels allows Go’s concurrency capabilities to be fully exploited for handling I/O operations asynchronously.

- Receiving results through a channel: The function that performs the I/O operation runs in a goroutine and sends its result, including any error, on a channel that the caller receives from when it needs the data.

- Overlapping computation with file reading: The caller starts the read, continues with other work while the goroutine waits on the disk, and blocks only when it finally receives from the channel.

Large File Processing Example

Processing a large file efficiently comes down to streaming it in fixed-size chunks rather than loading the whole file into memory at once, so memory use stays bounded regardless of file size.

Testing and Benchmarking

Thorough testing and benchmarking are crucial for building robust, high-performing Go applications. Testing verifies that the code behaves as expected, while benchmarking identifies performance bottlenecks and confirms that the application meets its performance requirements. Effective testing practices also streamline development by reducing debugging time and improving code quality.

This includes verifying individual components (unit tests) and measuring the speed of specific code paths (benchmarks). A comprehensive approach to testing is critical for maintaining software quality, especially in demanding high-performance contexts.

Importance of Testing and Benchmarking

Rigorous testing is essential for detecting and preventing bugs early in the development cycle. This proactive approach significantly reduces the cost and time associated with debugging and fixing issues later. Benchmarking is vital for optimizing performance and ensuring that the application meets the required speed and efficiency criteria.

Writing Unit Tests in Go

Unit tests isolate individual components of a Go program, allowing developers to verify their functionality independently. The `testing` package provides the tools for this. Tests live in files whose names end in `_test.go`; each test is a function whose name starts with `Test` and that takes a `*testing.T` argument. The `go test` command finds and runs them.

Example of a Unit Test

```go
package mypackage

import "testing"

// CalculateSum would normally live in a regular source file such as
// mypackage.go; it is defined here so this _test.go file is self-contained.
func CalculateSum(a, b int) int {
	return a + b
}

func TestCalculateSum(t *testing.T) {
	result := CalculateSum(2, 3)
	if result != 5 {
		t.Errorf("Expected 5, but got %d", result)
	}
}

func TestCalculateSumNegative(t *testing.T) {
	result := CalculateSum(-2, 3)
	if result != 1 {
		t.Errorf("Expected 1, but got %d", result)
	}
}
```

This example demonstrates simple unit tests for a `CalculateSum` function. `TestCalculateSum` checks the sum of two positive numbers, and `TestCalculateSumNegative` checks that a negative operand is handled correctly. `t.Errorf` marks a test as failed but lets it continue; `t.Fatalf` would stop it immediately.

Benchmarking Code Performance

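Benchmarking measures the execution time of specific code segments, which helps identify performance bottlenecks and guide optimization efforts. Benchmarks are functions in `_test.go` files whose names start with `Benchmark` and that take a `*testing.B` argument; `go test -bench=.` runs them, and adding `-benchmem` also reports memory allocations per operation.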

Example of a Benchmark

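```go
package mypackage

import "testing"

// sumSink is a package-level variable that receives each result, reducing
// the risk that the compiler eliminates the benchmarked work as dead code.
var sumSink int

func BenchmarkCalculateSum(b *testing.B) {
	for i := 0; i < b.N; i++ {
		sumSink = CalculateSum(2, 3)
	}
}
```

This benchmark measures the `CalculateSum` function. The testing framework adjusts `b.N`, the number of iterations, until the measurement runs long enough to be reliable, and then reports the average time per operation.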
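Besides `go test -bench`, the `testing` package provides `testing.Benchmark`, documented for running benchmarks outside the `go test` command. The sketch below uses it to compare appending to a growing slice against appending to a preallocated one; the function names and the slice length of 1000 are illustrative assumptions, and the printed numbers will vary by machine:

```go
package main

import (
	"fmt"
	"testing"
)

// sink is a package-level variable that keeps the built slices alive so
// the compiler cannot optimize the benchmarked work away.
var sink []int

const sliceLen = 1000

// benchGrow appends to a slice that starts empty, so append reallocates
// several times as capacity grows.
func benchGrow(b *testing.B) {
	for i := 0; i < b.N; i++ {
		var s []int
		for j := 0; j < sliceLen; j++ {
			s = append(s, j)
		}
		sink = s
	}
}

// benchPrealloc reserves the full capacity up front, so append never reallocates.
func benchPrealloc(b *testing.B) {
	for i := 0; i < b.N; i++ {
		s := make([]int, 0, sliceLen)
		for j := 0; j < sliceLen; j++ {
			s = append(s, j)
		}
		sink = s
	}
}

func main() {
	// testing.Benchmark chooses b.N automatically and returns the measurements.
	grow := testing.Benchmark(benchGrow)
	pre := testing.Benchmark(benchPrealloc)
	fmt.Printf("grow:     %d ns/op, %d allocs/op\n", grow.NsPerOp(), grow.AllocsPerOp())
	fmt.Printf("prealloc: %d ns/op, %d allocs/op\n", pre.NsPerOp(), pre.AllocsPerOp())
}
```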

Different Testing Strategies

Various testing strategies can be employed for different types of Go applications. These strategies can include:

- Integration testing: This strategy verifies the interaction between different modules or components of an application.

- End-to-end testing: This method tests the complete flow of an application from input to output, covering all interactions with external systems.

- Performance testing: This focuses on measuring the application’s performance under different loads, stress, and other constraints.

Each strategy plays a crucial role in ensuring a robust and well-performing application.

Testing Frameworks Comparison

| Framework | Advantages |
|---|---|
| Go’s built-in `testing` package | Simple, lightweight, and integrated directly into the Go toolchain (`go test`). |
| testify | Adds a richer set of assertions (`assert`, `require`), mocking, and test suites on top of the standard library. |
| ginkgo | Supports BDD-style testing (usually paired with the Gomega matcher library), allowing more descriptive test specifications. |

This table presents a concise comparison of various testing frameworks available for Go. Each framework offers distinct advantages, and the best choice depends on the specific needs of the project.

Building and Deploying High-Performance Go Applications

Go’s efficiency and concurrency features make it well suited to high-performance applications. This section covers the crucial steps of building and deploying these applications, from choosing build tools to deploying on various platforms, with a focus on keeping them performant in production.

Careful consideration of build tools, packaging, and deployment methods is vital to ensure scalability, reliability, and optimal resource utilization.

Build Tools and Techniques

Building Go applications involves several tools and techniques, each with its own strengths and use cases. Understanding these tools allows for more effective development and deployment. The most common tools for building Go applications are:

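- `go build`: The standard build command compiles a package and its dependencies into an executable binary. It is integrated with the Go toolchain and suits projects of any size. Useful flags include `-o` to name the output, `-trimpath` to remove local file system paths from the binary, and `-ldflags="-s -w"` to strip symbol and debug information for a smaller binary.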

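- `go install`: This command compiles and installs executables into the directory named by `GOBIN`, which defaults to `$GOPATH/bin` (usually `$HOME/go/bin`). In module mode it is typically used to install command-line tools, either from the current module or with a version suffix such as `go install example.com/cmd@latest`.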

- `go mod`: The `go mod` command manages Go modules. The `go.mod` file records the module’s dependencies and their versions, and `go.sum` records checksums for them; `go mod tidy` adds missing and removes unused requirements. This makes builds reproducible and ensures all necessary dependencies are available during compilation.

- `make`: For more complex projects, `make` scripts can be used to automate the build process. They define build rules, enabling customization and flexibility for handling diverse dependencies and configurations.
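A common build-time technique, often wrapped in a `make` target, is stamping version information into the binary with the linker’s `-X` flag. A minimal sketch, assuming a hypothetical application that keeps its version in the package-level variable `main.version`:

```go
package main

import "fmt"

// version is overridden at build time with the linker's -X flag, e.g.:
//
//	go build -ldflags "-X main.version=1.4.2" -o app .
//
// -X only sets package-level string variables; without it, "dev" is kept.
var version = "dev"

// buildLabel returns the string the application reports about itself.
func buildLabel() string {
	return "app " + version
}

func main() {
	fmt.Println(buildLabel())
}
```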

Packaging and Distribution

Packaging and distributing Go applications involve several approaches, each suited for specific use cases and project sizes. Effective packaging ensures easy deployment and portability across different systems.

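- Binary Distribution: The most straightforward approach is to ship the compiled executable as a standalone binary. Programs built without cgo (for example with `CGO_ENABLED=0`) are statically linked, so they usually need nothing on the target beyond the operating system, and they can be cross-compiled by setting the `GOOS` and `GOARCH` environment variables. Deployment involves simply transferring the binary to the target system and running it.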

- Dependency Management with Go Modules: Go modules, managed with `go mod`, are the standard way to manage dependencies and have replaced older tools such as `dep` and `glide`. For fully self-contained source distributions, `go mod vendor` copies all dependencies into a `vendor` directory inside the project. This approach is often preferred for larger projects.

- Containerization with Docker: Encapsulating the application in a Docker container provides a consistent runtime environment, so the application behaves predictably across systems. Docker images bundle the application, its dependencies, and the runtime environment, which facilitates consistent deployment and scaling. Multi-stage builds, which compile the program in a full Go image and copy only the resulting binary into a minimal base image, keep images small.

Deployment on Various Platforms

Deploying Go applications on various platforms involves several strategies, with cloud environments being a common target. Effective deployment ensures the application’s performance and availability.

- Cloud Servers (AWS, Azure, GCP): Cloud platforms provide scalable infrastructure. Deploying to cloud servers involves configuring instances, setting up networking, and deploying the application. Cloud-based deployments offer scalability and resilience.

- Virtual Machines (VMs): VMs provide isolated environments for running applications. Deployment to VMs involves setting up the VM, installing necessary software, and deploying the application. VM deployment is suitable for applications requiring a dedicated environment.

- Dedicated Servers: Dedicated servers offer more control and customization. Deployment involves installing the operating system, configuring the server, and deploying the application. This option is suitable for applications requiring significant control and resources.

Optimizing Deployment Process

Optimizing the deployment process for high performance involves several key considerations, aiming for efficiency and speed.

- Caching: Caching frequently accessed data, for example in a CDN for static content or in an in-memory store such as Redis, can significantly improve performance by reducing the load on application servers and speeding up response times for users.

- Automated Deployment: Employing CI/CD pipelines automates the deployment process. This reduces manual intervention, minimizes errors, and improves deployment speed.

- Monitoring: Implementing comprehensive monitoring tools allows for real-time performance tracking. This facilitates rapid identification of potential bottlenecks and performance issues.

“A well-defined deployment process, leveraging automation and optimization techniques, is crucial for maintaining high performance in Go applications. This includes utilizing build tools efficiently, packaging applications appropriately, and deploying on platforms that match the application’s requirements.”

Real-World Examples

Go’s performance characteristics make it suitable for a wide range of high-performance applications. Its concurrency features, efficient memory management, and static typing help it handle demanding workloads. This section looks at real-world uses of Go in high-performance settings, highlighting its advantages and addressing potential challenges.

Go’s main strength is how easily it expresses highly concurrent programs, which suits tasks that benefit from parallel processing. This is evident in applications ranging from web servers to data pipelines, where spreading work across multiple cores is crucial.

High-Performance Web Servers

Go’s concurrency model, combined with its efficient networking libraries, makes it a compelling choice for building high-performance web servers. The net/http package provides a robust foundation for handling concurrent requests: its server runs each accepted connection in its own goroutine, so one slow request does not block the others. Popular web frameworks such as Gin and Echo are built on top of net/http and inherit this model, and because goroutines are far cheaper than OS threads, they can keep thousands of connections in flight at once. This results in responsive and scalable web applications.

Data Processing Pipelines

Go excels in handling data processing pipelines due to its ability to process data concurrently and efficiently. Its robust standard library and third-party packages facilitate the development of complex data pipelines. Consider a large-scale log processing system: Go’s goroutines can process log entries concurrently, allowing for real-time analysis and filtering. This capability translates into substantial performance improvements compared to traditional, single-threaded approaches.

The concurrent processing of data reduces latency and increases throughput, allowing the pipeline to handle massive datasets effectively.

High-Performance Computing (HPC) Environments

Go is gaining traction in HPC environments, where computational tasks often require substantial processing power. Its efficiency in handling concurrent computations makes it a strong contender for parallel computing. For instance, in scientific simulations or large-scale data analysis, Go can be employed to parallelize tasks, leading to significant reductions in computation time.

Challenges and Solutions in High-Performance Use Cases

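While Go is well-suited for high-performance applications, some challenges can arise. One common concern is memory management, particularly in scenarios with large datasets. Because Go is garbage-collected, the main levers are reducing allocation pressure and tuning the collector: choose compact data structures, preallocate slices and maps when their size is known, reuse buffers (for example with sync.Pool), and adjust the collector through the GOGC and GOMEMLIMIT settings when profiling shows that garbage collection is the bottleneck.

Concurrency needs similar care. Goroutines and channels are cheap, but excessive concurrency can still create bottlenecks: thousands of goroutines competing for the same lock, database, or downstream service add contention and scheduling overhead without adding throughput. Solutions involve matching the degree of parallelism to the problem, for example by bounding the number of concurrent workers, and allocating resources efficiently. Profiling (with pprof) and benchmarking (with the testing package's benchmarks) are crucial for identifying and addressing potential performance issues.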

Architecture of a High-Performance Go Web Server

A high-performance Go web server often employs a layered architecture. The first layer typically handles incoming requests using the `net/http` package, which serves each connection in its own goroutine; when handlers trigger expensive work, that work can additionally be bounded with a worker pool. This architecture enables the server to respond to multiple requests simultaneously. The second layer routes requests to specific handlers, which perform the requested operations.

These handlers often interact with databases or other external services; reusing pooled connections (such as the pool managed by `database/sql`, or a shared `http.Client`) and setting timeouts on those calls helps avoid bottlenecks. The third layer handles data serialization and response generation. A well-designed Go web server uses these layers to handle requests efficiently and sustain high performance.

End of Discussion

In conclusion, this guide has provided an overview of getting started with Go for high-performance applications. By understanding Go’s core principles, data structures, concurrency capabilities, memory management, and I/O techniques, you are well-equipped to build and deploy high-performing applications, and the emphasis on testing and benchmarking helps maintain quality and performance throughout the development lifecycle. With these foundations in place, you are ready to embark on your Go development journey.